Smart Things (public account: zhidxcom)

Author | Xiong Dabao

Editor | Li Shuiqing

Smart Things reported on April 15 that Google recently announced an AR indoor navigation feature that guides users through airports, transit stations, and shopping centers and helps them find the nearest elevators and escalators, boarding gates, platforms, baggage claim, check-in counters, ticket offices, restrooms, ATMs, and more.

This AR indoor navigation technology overcomes the flatness and poor legibility of traditional indoor navigation and improves image accuracy and data versatility. It not only gives users a novel, personalized indoor navigation experience but also brings potential application value to industries such as offline retail.

This article analyzes AR indoor navigation from three angles: how AR improves the legibility of indoor navigation maps, how AR navigation moved from outdoors to indoors, and the development prospects of AR indoor navigation.

1. AR’s breakthrough in indoor navigation: a leap from two dimensions to three, with improved map legibility

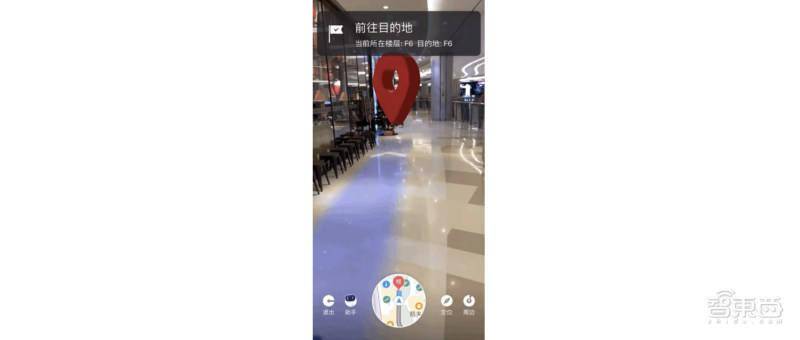

With AR indoor navigation, the system identifies the user’s location and floor, then guides the user to the destination with AR overlays such as arrows and information markers.

Although this guidance seems simple, earlier indoor navigation methods such as indoor maps, Bluetooth beacon positioning, Wi-Fi signal positioning, and GPS positioning could not deliver it.

The indoor map is the most traditional method; a library’s shelf-lookup map is one example. It collects a large amount of data into an index map, and readers navigate to a book by its unique number. However, producing and presenting maps of large indoor environments is costly, and the approach does not generalize well.

Bluetooth beacon positioning is more popular. Using Bluetooth, smartphone software can roughly locate the user on the map for route planning and navigation. However, because Bluetooth has a short transmission range, deployment and maintenance costs are extremely high in large indoor environments such as shopping malls and office buildings.

Another solution is positioning and navigation based on Wi-Fi signals. However, because indoor environments are complex, Wi-Fi signals shift easily as the environment changes, so maintenance costs remain high, and accuracy is limited by factors such as Wi-Fi router deployment density, environmental stability, and training duration.
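The article does not say which Wi-Fi technique it has in mind; the mention of training suggests fingerprinting, where signal strengths are surveyed at known positions in advance. The following is a minimal sketch under that assumption. The access point names, survey positions, dBm values, and the `locate` helper are all hypothetical.

```python
import math

# Hypothetical fingerprint database: surveyed (x, y) position in meters ->
# signal strength (dBm) measured from each access point at that position.
FINGERPRINTS = {
    (0.0, 0.0): {"ap1": -40, "ap2": -70, "ap3": -80},
    (10.0, 0.0): {"ap1": -70, "ap2": -40, "ap3": -75},
    (0.0, 10.0): {"ap1": -75, "ap2": -80, "ap3": -45},
    (10.0, 10.0): {"ap1": -80, "ap2": -60, "ap3": -50},
}

MISSING_DBM = -100  # assumed value for an access point that was not heard


def signal_distance(a, b):
    """Euclidean distance between two readings across all access points."""
    aps = set(a) | set(b)
    return math.sqrt(
        sum((a.get(ap, MISSING_DBM) - b.get(ap, MISSING_DBM)) ** 2 for ap in aps)
    )


def locate(reading, k=2):
    """Average the positions of the k fingerprints closest in signal space."""
    ranked = sorted(
        FINGERPRINTS, key=lambda pos: signal_distance(reading, FINGERPRINTS[pos])
    )
    nearest = ranked[:k]
    x = sum(p[0] for p in nearest) / len(nearest)
    y = sum(p[1] for p in nearest) / len(nearest)
    return (x, y)
```

In a scheme like this, the survey has to be repeated whenever routers move or the floor layout changes, which is one way to read the maintenance cost described above.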

Although GPS positioning is widely used, its signal penetrates buildings poorly and its positioning accuracy is low. It is suitable only for two-dimensional planes: it can determine the user’s approximate location but not the direction the user is facing, so it is of little use inside large buildings.

▲The GPS signal bounces off the facade of the building

The AR indoor navigation that Google has launched uses a new Visual Positioning System (VPS), which can make up for the shortcomings of the methods above.

Google’s AR indoor navigation feature (Indoor Live View) relies on VPS to determine the user’s indoor location and uses visual processing to combine the virtual route with the actual indoor scene.

The visual positioning system VPS is different from GPS. It does not rely on signals for positioning, but on images.

First, engineers create a localized map through VPS, an approach called simultaneous localization and mapping (SLAM). By taking a series of images with known locations and analyzing their visual features, such as buildings and bridges, VPS creates the map and builds a fast searchable index.

Second, when the user needs indoor navigation and positioning, VPS matches the view from the camera against the views in the VPS index. It combines data from the camera and the phone’s built-in inertial measurement unit (IMU) sensors, such as the gyroscope, accelerometer, and gravity sensor, with artificial intelligence algorithms such as motion tracking and environmental understanding to determine the location and direction of the phone user relative to the real world.
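The index-then-match idea in the two steps above can be illustrated with a toy example. This is only a sketch of coarse place recognition, not Google’s implementation: the place names, the three-number descriptors, and the `max_distance` threshold are invented for illustration, and a real system would go on to compute a precise pose using geometry and IMU data.

```python
import math

# Hypothetical index: place name -> feature descriptors extracted from
# reference images that were captured at a known location.
INDEX = {
    "gate_a": [(0.9, 0.1, 0.0), (0.8, 0.2, 0.1)],
    "food_court": [(0.1, 0.9, 0.2), (0.0, 0.8, 0.3)],
}


def best_match(descriptor):
    """Return (place, distance) of the indexed descriptor closest to the query."""
    best = (None, math.inf)
    for place, descriptors in INDEX.items():
        for ref in descriptors:
            d = math.dist(descriptor, ref)
            if d < best[1]:
                best = (place, d)
    return best


def localize(query_descriptors, max_distance=0.3):
    """Vote for the place whose reference features best match the camera view."""
    votes = {}
    for q in query_descriptors:
        place, d = best_match(q)
        if d <= max_distance:  # ignore features with no convincing match
            votes[place] = votes.get(place, 0) + 1
    if not votes:
        return None
    return max(votes, key=votes.get)
```

A linear scan like `best_match` would be far too slow at the scale the article describes; the "fast searchable index" presumably relies on approximate nearest-neighbor structures, which are omitted here.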

Finally, for presentation, VPS superimposes labels such as guidance arrows and text captions onto the live camera image in real time and relies on light estimation to blend virtual content into the real world. Light estimation lets the phone camera sense the lighting conditions of the current environment and color-correct the virtual image, which makes the combined virtual and real image look more realistic.
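As a rough illustration of the color correction step, the sketch below estimates a per-channel light level from a camera frame and scales a virtual pixel by it. The function names and the simple mean-brightness estimate are assumptions made for this example; they are not the API or algorithm of any particular AR framework.

```python
def estimate_light(frame):
    """Estimate per-channel light level (0.0 to 1.0) as the mean of a frame.

    `frame` is a list of (r, g, b) pixels with values from 0 to 255.
    """
    n = len(frame)
    return tuple(sum(pixel[c] for pixel in frame) / n / 255 for c in range(3))


def color_correct(pixel, light_scale):
    """Scale a virtual RGB pixel by the estimated light, clamped to 0..255."""
    return tuple(
        min(255, max(0, round(channel * scale)))
        for channel, scale in zip(pixel, light_scale)
    )
```

For instance, a bright blue guidance arrow rendered in a dim, warm-lit corridor would be darkened and shifted toward the ambient tint, so it looks less like a sticker pasted on the camera image.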

2. AR navigation moves indoors: improved image accuracy and data versatility

In 2019, Google launched its AR navigation feature (Live View). Relying on VPS and the Google Street View image library, it helps users get accurate navigation routes outdoors. In later updates, users could also identify surrounding landmarks through AR navigation, giving them a clearer sense of where they are in the city.

How difficult is it to go from outdoor navigation to indoor navigation?

Large indoor venues such as exhibition halls, museums, shopping malls, and transportation hubs are complex and contain many textureless and visually ambiguous areas, so collecting, producing, and presenting map data is a major difficulty. Changes in lighting and viewpoint also make it hard to relocalize users quickly and accurately. Google has made several technological breakthroughs here.

The first technological breakthrough is improved image accuracy. More than 10 years ago, Google began introducing Street View, which gave users a “human eye view” map browsing experience, but at first this Street View data was not localized. Localizing image data makes it possible to attach annotation labels to pictures of objects, places, and so on, helping users understand them quickly.

To achieve global localization with VPS, Google connects it with Street View data collected and tested in more than 93 countries. This rich data set provides trillions of strong reference points for triangulation, helping to determine a device’s location more accurately and guide people to their destinations.
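Triangulation can be shown in its simplest form: an observer who knows the direction to two reference points with known coordinates can solve for their own position. The sketch below is a two-dimensional toy with two landmarks, assuming the observer’s absolute heading is already known; the real system works in 3D with many reference points, and the `triangulate` helper is hypothetical.

```python
import math


def triangulate(landmark_a, bearing_a, landmark_b, bearing_b):
    """Locate an observer from bearings to two landmarks at known positions.

    Bearings are angles in radians, measured counterclockwise from the +x
    axis, pointing from the observer toward each landmark.
    """
    ua = (math.cos(bearing_a), math.sin(bearing_a))
    ub = (math.cos(bearing_b), math.sin(bearing_b))
    # The observer P satisfies P + da*ua = A and P + db*ub = B,
    # so da*ua - db*ub = A - B. Solve this 2x2 system with Cramer's rule.
    rx = landmark_a[0] - landmark_b[0]
    ry = landmark_a[1] - landmark_b[1]
    det = ua[0] * (-ub[1]) - (-ub[0]) * ua[1]
    if abs(det) < 1e-9:
        raise ValueError("bearings are parallel; position is ambiguous")
    da = (rx * (-ub[1]) - (-ub[0]) * ry) / det
    return (landmark_a[0] - da * ua[0], landmark_a[1] - da * ua[1])
```

With more than two reference points the system becomes overdetermined, and a least-squares fit would typically replace the exact solve, which is one reason a dense set of reference points improves accuracy.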

▲Google triangulation method to accurately locate buildings

Although this method works well in theory, challenges remain in practice. During localization, the phone’s live image may differ from the scene as it looked when the Street View imagery was captured. For example, the appearance of trees changes with the seasons, or even with the strength of the wind, so a good match may not be found.

To overcome this problem, Google uses machine learning to filter out the temporary, changeable parts of a scene and focus on its fixed structures. This advance also makes today’s AR indoor navigation more accurate.
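The filtering idea can be sketched as follows, assuming each visual feature has already been tagged with a semantic label by some trained model (not shown). The label set and the feature format are hypothetical and chosen only to illustrate the principle.

```python
# Assumed label set; a real system would obtain labels from a trained
# segmentation or detection model, which is outside this sketch.
TRANSIENT_LABELS = {"tree", "person", "vehicle", "sky"}


def keep_stable_features(features):
    """Drop features whose semantic label marks them as likely to change."""
    return [f for f in features if f["label"] not in TRANSIENT_LABELS]


features = [
    {"id": 1, "label": "building"},
    {"id": 2, "label": "tree"},
    {"id": 3, "label": "signboard"},
    {"id": 4, "label": "person"},
]
stable = keep_stable_features(features)  # keeps features 1 and 3
```

Only the surviving features would then be matched against the index, so seasonal foliage or passers-by do not distort the result.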

Another technological breakthrough is the rapid acquisition of data.

Airports, shopping malls, hospitals… With so many buildings, Google needs to capture a large number of images inside public venues. While building its outdoor Street View database, Google gradually improved its image collection methods, moving from the early Street View cars to Street View tricycles and trolleys, and then to the convenient, lightweight Street View backpack (Trekker).

▲The old street view backpack on the left and the new one on the right

The lower part of the Street View backpack is the pack itself, and on top sits a spherical device fitted with fifteen 5-megapixel cameras. With this backpack, image collectors can photograph corners that cars cannot reach.

As a result, to collect images of indoor public venues, Google can send a large number of contractors wearing its Street View backpacks through an airport, station, or shopping mall to capture VPS data quickly.

Google said that AR indoor navigation will launch first in some shopping malls in Chicago; Long Island, New York; Los Angeles; Newark, New Jersey; San Francisco; San Jose, California; and Seattle. In the coming months, it will also launch at airports, shopping malls, and transit stations in Tokyo and Zurich.

However, the development of AR indoor navigation still has weak points: a focus on product development and launch without enough understanding of users and functional requirements, map presentation that pursues visualization and realism but lacks purpose and rationality, and lagging development of the hardware used to present these applications.

3. The development prospects of AR indoor navigation: scene expansion, use increase, equipment extension

AR indoor navigation technology is now developing at an accelerating pace. Besides Google, China’s Baidu and AutoNavi are also exploring research and development and application scenarios.

Take Baidu as an example:

In November 2016, Baidu launched its AR walking navigation feature. The navigation interface includes elements such as a compass, route, turn indicators, waypoint and destination bubbles, roller guidance enhancement, and a route overview, along with voice reminders, to improve the walking navigation experience. In April 2017, Baidu added “Tutu”, a big-eyed, cute AR navigation cartoon character, to make AR guidance more fun. In December 2020, Baidu Maps and the Baidu Brain DuMix AR visual positioning service platform launched an AR indoor navigation feature called AI indoor communication.

AI indoor communication gives users high-precision 6DoF positioning through the visual positioning and augmenting service (VPAS). VPAS uses image-based 3D reconstruction to build high-precision geometric maps of shopping malls, then uses scene understanding and 3D visual positioning algorithms to calculate the user’s precise location in real time. Positioning accuracy can reach 1 meter in real, complex environments. The feature is currently live at venues such as Beijing Qinghe Wanxianghui, Beijing Shuang’an Shopping Center, and Shanghai 2049 Emerald Park Shopping Center. The technology can also be used in scenarios such as industrial inspection and logistics sorting.
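6DoF (six degrees of freedom) means a pose has three translational and three rotational components. The sketch below shows one common way to represent such a pose and use it to place a point from the device’s frame into the world frame. The `Pose6DoF` class and its yaw-pitch-roll convention are assumptions for illustration, not Baidu’s data format.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    # Three translational degrees of freedom (meters).
    x: float
    y: float
    z: float
    # Three rotational degrees of freedom (radians).
    yaw: float    # rotation about the z axis
    pitch: float  # rotation about the y axis
    roll: float   # rotation about the x axis

    def rotation(self):
        """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def to_world(self, point):
        """Map a point from device coordinates into world coordinates."""
        r = self.rotation()
        t = (self.x, self.y, self.z)
        return tuple(
            sum(r[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)
        )
```

An AR arrow defined one meter in front of the device could be passed through `to_world` to find where it sits in the mall’s map, which is why position alone is not enough for AR overlays.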

Although AutoNavi and Sogou have relatively mature outdoor AR walking navigation and in-vehicle navigation technology, no involvement by either in AR indoor navigation has been found so far.

Beyond the major map providers, SenseTime is also exploring AR indoor navigation. The SenseMARS Mars Mixed Reality Platform, jointly built by the Zhejiang University-SenseTime 3D Vision Joint Laboratory and the SenseTime product team, uses sparse point cloud mapping to reconstruct high-precision 3D maps and calculates the device’s current 6DoF pose from IMU sensor data, combined with SLAM, to achieve accurate indoor positioning and AR navigation.

In the future, building on AI and IoT technologies, AR indoor navigation will let users see the digital attributes of a physical space, such as virtual store signs, promotions, the number of people in the mall, store traffic, and other real-time information. On their phones, users will be able to see in real time whether a restaurant has a queue and which products are on sale in trendy stores. Combined with user profiles, AR indoor navigation will also give users a personalized offline experience. On the presentation side, indoor navigation devices will expand from mobile phones to AR glasses, AR headsets, and other hardware, addressing the dizziness and eye fatigue that AR maps on phone screens can cause.

It is worth mentioning that the “Virtual (Augmented) Reality White Paper 2021” from the China Academy of Information and Communications Technology states that AR based on inside-out tracking is maturing, and that tracking and positioning are trending toward multi-sensor fusion that integrates vision cameras, IMUs, depth cameras, event cameras, and so on. With industry and academia both taking part, AR indoor navigation should develop well.

Conclusion: AR indoor navigation will become a new trend in the development of the industry

In map presentation, AR technology has unmatched advantages in integrating people, maps, and the environment. Google’s launch of AR indoor navigation may therefore, to some extent, set off a new wave of AR indoor navigation development in China and abroad.

The moves by major map providers such as Baidu, AutoNavi, and Sogou show that Baidu is one step ahead: it has launched AR indoor navigation in some shopping malls in Beijing, Shanghai, Guizhou, Langfang, and other places, and will continue to invest in this field. Gaode Maps (AutoNavi) is following closely, has upgraded its AR walking navigation this year, and may soon begin exploring indoor scenes.

In the current industrial Internet era, AR indoor navigation, supported by AI and IoT technologies, will improve the space utilization of large indoor venues, broaden industry boundaries, and bring more possibilities to many smart industries. We look forward to AR navigation eventually covering every indoor public venue in the world.

Sources: Ars Technica, Google Blog, Code Swift, CNKI, Baidu
