By Xiao Xiao, reporting from Aofeisi

Qubit Report | Public Account QbitAI

Simply because he resembled a thief, a man was “caught” by facial recognition and jailed for 30 hours.

The facial recognition system's match was based on nothing more than a driver’s license photo.

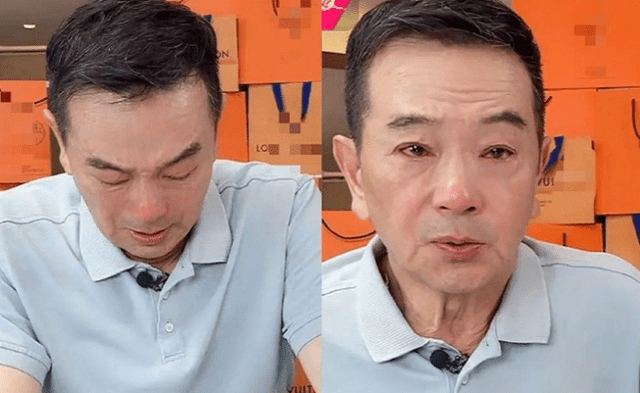

This happened in 2019. The man wrongly arrested was Robert Williams, a Black man. He was released only after posting $1,000 bail.

According to a report in Wired magazine, this may be the first known case of its kind.

Only now has the case been formally filed in federal court.

The first wrongful arrest caused by facial recognition

The story begins three years ago.

In 2018, a thief stole several luxury watches from a Shinola retail store and was recorded by the store's surveillance camera.

The surveillance footage was very blurry, and the thief never looked up at the camera.

Under these circumstances, the police turned to facial recognition technology and singled out 42-year-old Robert Williams as the suspect on the basis of a driver’s license photo alone.

In 2019, the police showed his photo to a security guard at the store, who confirmed that it was him, even though the guard had not actually witnessed the theft.

Robert Williams had just returned home from work when he was arrested and taken away by the police in front of his wife and daughter. At no point was he asked whether he had an alibi.

He then spent 30 hours in jail, lying on a dirty concrete floor.

Robert Williams was not released until he posted $1,000 bail.

Now, the American Civil Liberties Union (ACLU) and the University of Michigan Law School have formally filed a federal lawsuit on behalf of Robert Williams.

According to the court filings, this is the first case of wrongful arrest in the United States caused by an incorrect facial recognition match.

But what Robert Williams wants is more than just compensation.

He hopes the case will lead to policy changes that stop the abuse of facial recognition technology.

Robert Williams himself said that he was arrested in front of his wife and daughter simply because of a flawed machine algorithm, and that he hopes this kind of thing never happens to anyone else.

More than one similar case

In fact, facial recognition has “caught” the wrong person several more times since then.

The second wrongful arrest attributed to facial recognition also involved a Black man, Michael Oliver, who sued the police department last year.

According to NJ Advance Media, the same thing happened in New Jersey. After the police used facial recognition to arrest a Black man named Nijeer Parks, he was held in jail for 10 days before they realized they had the wrong person.

During this period, the police checked neither fingerprints nor DNA, relying solely on the facial recognition system to identify the suspect.

Currently, Nijeer Parks is still appealing, and New Jersey has also banned the use of this software.

However, it is understood that thousands of law enforcement agencies are already using facial recognition technology to find criminals.

Yet facial recognition systems are often unreliable.

At the 2017 UEFA Champions League final, British police deployed a facial recognition system that raised a total of 2,470 alerts, with an error rate of 92%.
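
To put the figures quoted above in absolute terms, the short calculation below converts the reported error rate into approximate counts. It is only a sketch: the 92% figure is rounded in the article, so the derived counts are estimates rather than official numbers, and the variable names are illustrative.

```python
# Figures as quoted in the article. The error rate is rounded,
# so the counts derived from it are approximations.
total_alerts = 2470
error_rate = 0.92

# Approximate number of alerts that pointed at the wrong person.
false_alerts = round(total_alerts * error_rate)

# Whatever remains is the approximate number of correct alerts.
correct_alerts = total_alerts - false_alerts

# Share of all alerts that were actually correct.
share_correct = correct_alerts / total_alerts

print(f"approx. false alerts:   {false_alerts}")
print(f"approx. correct alerts: {correct_alerts}")
print(f"share of alerts that were correct: {share_correct:.0%}")
```

In other words, on these figures only about one alert in twelve pointed at the right person.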

The facial recognition software used by US police typically relies on nothing more than billions of social media photos to identify criminal suspects.

Technically speaking, a facial recognition match alone cannot uniquely identify a suspect.
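
One way to see why a match is not a unique identification is to estimate how many innocent look-alikes a large photo database can be expected to produce. The sketch below uses entirely hypothetical numbers (neither the gallery size nor the false match rate comes from the article or from any real system), and it assumes that each comparison errs independently, which is a simplification.

```python
def expected_false_matches(gallery_size: int, false_match_rate: float) -> float:
    """Expected number of innocent people flagged in one database search."""
    return gallery_size * false_match_rate


def prob_at_least_one_false_match(gallery_size: int, false_match_rate: float) -> float:
    """Chance that a search flags at least one innocent person,
    assuming every comparison errs independently (a simplification)."""
    return 1.0 - (1.0 - false_match_rate) ** gallery_size


# Hypothetical values chosen only for illustration.
GALLERY_SIZE = 1_000_000      # photos searched
FALSE_MATCH_RATE = 1e-5       # one wrong match per 100,000 comparisons

expected = expected_false_matches(GALLERY_SIZE, FALSE_MATCH_RATE)
p_any = prob_at_least_one_false_match(GALLERY_SIZE, FALSE_MATCH_RATE)

print(f"expected innocent matches per search: {expected:.1f}")
print(f"probability of at least one innocent match: {p_any:.4f}")
```

Under these assumed numbers, even a matcher that is wrong only once in 100,000 comparisons would be expected to flag several innocent people per search, so a match can at most serve as a lead that other evidence must confirm.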

Should facial recognition be used?

US police have been using facial recognition technology for more than 20 years.

But researchers from MIT and NIST found that the technology works comparatively better on white men, which is related to how many samples of them appear in face recognition datasets.

Models trained on such datasets often perform poorly on people of other races.

And the problem goes beyond datasets.

Another problem with facial recognition is that surveillance cameras compress video, so that details used to tell suspects apart, such as skin patterns, veins, and moles, are removed, damaged, or distorted.

Moreover, depending on the lighting conditions in the footage, some artifacts may be mistaken for facial features such as moles.

Most importantly, the AI models used in facial recognition are very hard to interpret, and there is little empirical data to back up forensic opinions based on them.

Currently, Internet giants such as Microsoft, IBM and Amazon have pledged not to sell facial recognition systems to the police.

But the face recognition technology itself is in a somewhat awkward position.

On one hand are the privacy and accuracy problems that come with the technology; on the other are the convenience and commercial benefits it brings.

In China, even the cameras at sales offices have been pointed at passers-by for “identification”.

Where and how to use face recognition is still open for discussion.
