Author | ThoughtWorks

Editor | Tina

The Technology Radar is a technology trend report that ThoughtWorks publishes every six months. It tracks how interesting technologies, which we call blips, are developing. The Radar classifies blips using quadrants and rings: the quadrants represent different kinds of technology, and the rings represent our assessment of their maturity.

After six months of tracking and reflection, the ThoughtWorks TAB (Technology Advisory Board) has produced the 24th edition of the Technology Radar for technologists, based on our hands-on experience across multiple industries. It analyzes more than one hundred blips, explains their current maturity, and offers corresponding advice on technology selection.

1 Four themes in this issue

Platform teams that accelerate product launch

More and more organizations are adopting the platform team concept for product development: they set up a dedicated team to create and support internal platform capabilities, and use those capabilities to accelerate application development, reduce operational complexity, and shorten time to market. We welcome this increasingly mature approach, which we first featured in the Technology Radar as early as 2017. But as it matures, we find that organizations should avoid certain anti-patterns when applying it. For example, “one platform to rule them all” may not be the best choice, and “building a big platform up front” may take years to deliver value. Building on the assumption that “if we build it, they will come” may end up being a huge waste. Instead, product thinking helps clarify which services each internal platform should provide, based on the needs of its customers.
But if the platform team is only allowed to work on issues submitted through a technical support ticketing system, this approach recreates the old-fashioned operations silo, along with the drawbacks of misaligned priorities, such as slow feedback and response and contention for scarce resources. We have also seen some new tools and integration patterns emerge for effectively dividing up teams and technologies.

The convenience of integration over the single best solution

We have seen developer-facing tool integrations appear on many platforms, especially in the cloud space: artifact repositories, source control, CI/CD pipelines, wikis, and similar tools. Development teams have usually hand-picked these tools and stitched them together as needed. A consolidated tool stack can offer developers more convenience and less work, but the tools in such a set rarely represent the best solution in their class.

Too complex to blip

In Radar nomenclature, the discussion of many complex topics ends in the status “TCTB: too complex to blip”. This means those items cannot be classified because they show too many pros and cons, with so much nuance regarding the advice, the applicability of tools, and other factors that we cannot summarize them in just a few sentences. These topics tend to come up year after year, and include monorepos, orchestration guidelines for distributed architectures, branching models, and so on. As with many topics in software development, there are too many trade-offs to give clear advice.

Identifying the context for architectural coupling

In software architecture, how to determine an appropriate level of coupling between microservices, components, API gateways, integration hubs, front ends, and so on is a topic discussed in almost every meeting.
Wherever two pieces of software need to be connected, architects and developers struggle to find the right level of coupling. We need to spend the time and effort to understand these factors and then make these decisions in context, rather than hoping to find a generic but ill-fitting solution.

2 A first look at some quadrant highlights

Platform engineering product teams (Adopt)

As noted in one of this issue’s themes, the industry has accumulated more and more experience with “platform engineering product teams” that create and support internal platforms. Teams across the organization use these platforms to accelerate application development, reduce operational complexity, and shorten time to market. As adoption grows, we are becoming more aware of the good and bad patterns of this approach. When creating a platform, it is important to clearly define the customers and products that will benefit from it, rather than building it in a vacuum. We need to beware of layered platform teams, which simply preserve existing technology silos under a “platform team” label, and we must also be careful about ticket-driven platform operating models. When considering how best to organize platform teams, we remain firm supporters of the Team Topologies concepts. We believe that platform engineering product teams are a standard approach and an important enabler of high-performance IT.

Cloud sandboxes (Trial)

As cloud services become more common, and as creating cloud sandboxes becomes easier and can be applied at scale, our teams increasingly prefer fully cloud-based (as opposed to local) development environments in order to reduce maintenance complexity.
We found that tools for simulating cloud-native services locally limited developers’ confidence in the build and test cycle, so we are focusing on standardized cloud sandboxes instead of running cloud-native components on development machines. This approach better enforces infrastructure-as-code practices and gives developers a good onboarding process for the sandbox environment. Of course, this shift also carries risks: it makes developers completely dependent on the availability of the cloud environment, and it may slow down the feedback they receive. We strongly recommend adopting some lean governance practices to manage the standardization of these sandbox environments, especially regarding security, access control, and regional deployment.

Homomorphic encryption (Assess)

Fully homomorphic encryption refers to a class of encryption methods that allow computations (such as search and arithmetic) to be performed directly on encrypted data. The result of the computation remains encrypted and can be decrypted and revealed later. Although the homomorphic encryption problem was first posed in 1978, a solution did not appear until 2009. With improvements in computing power and the emergence of easy-to-use open source libraries such as SEAL, Lattigo, HElib, and partially homomorphic encryption in Python, applying homomorphic encryption in real-world applications has become truly feasible. The exciting application scenarios include privacy protection when outsourcing computation to an untrusted third party, such as computing on encrypted data in the cloud, or enabling a third party to aggregate the homomorphically encrypted intermediate results of federated machine learning.
In addition, most homomorphic encryption schemes are considered secure against quantum computers, and efforts to standardize homomorphic encryption are underway. Although homomorphic encryption currently has many limitations in terms of performance and the types of computation it supports, it is still a technology worth our attention.
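
To make the idea of computing on encrypted data more concrete, here is a minimal sketch of the Paillier scheme, a well-known partially (additively) homomorphic cryptosystem. It is a toy written for illustration only: the function names are hypothetical, the primes are far too small to be secure, and it does not use or reproduce the API of SEAL, Lattigo, HElib, or any Python library mentioned above.

```python
import math
import secrets


def generate_keypair(p, q):
    """Build a toy Paillier key pair from two distinct primes (insecure sizes)."""
    n = p * q
    # Carmichael's function of n: lcm(p - 1, q - 1)
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)
    g = n + 1  # common simplification for the generator
    # With g = n + 1, L(g^lam mod n^2) equals lam mod n, so mu is its inverse.
    mu = pow(lam, -1, n)
    return (n, g), (lam, mu)


def encrypt(public_key, message):
    """Encrypt an integer 0 <= message < n under the public key."""
    n, g = public_key
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    return (pow(g, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(public_key, private_key, ciphertext):
    """Recover the plaintext integer from a ciphertext."""
    n, _ = public_key
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(public_key, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = public_key
    return (c1 * c2) % (n * n)


def multiply_by_constant(public_key, ciphertext, k):
    """Raising a ciphertext to the power k multiplies the plaintext by k (mod n)."""
    n, _ = public_key
    return pow(ciphertext, k, n * n)


if __name__ == "__main__":
    public_key, private_key = generate_keypair(1009, 1013)
    salary_a = encrypt(public_key, 20)
    salary_b = encrypt(public_key, 22)
    # An untrusted party can combine the ciphertexts without the private key.
    encrypted_total = add_encrypted(public_key, salary_a, salary_b)
    print(decrypt(public_key, private_key, encrypted_total))
```

Only the holder of the private key can read the total, while the party doing the addition sees ciphertexts only. Paillier supports addition and multiplication by a known constant; fully homomorphic schemes extend this to arbitrary computations at a much higher performance cost.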

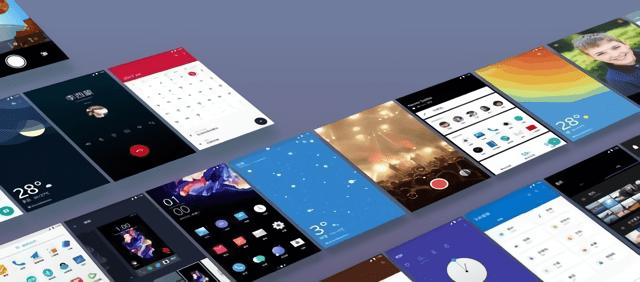

Sentry (Adopt)

Sentry has become the default choice for many teams. It provides convenient features, such as error grouping and error filtering rules defined with appropriate parameters, which greatly help in dealing with the large volume of errors coming from end-user devices. By integrating Sentry into the continuous delivery pipeline, you can upload source maps to debug errors more efficiently and easily trace which version of the software produced them. We appreciate that, although Sentry is a SaaS product, its source code is public, so it can be used for free for some smaller use cases and for self-hosting.

imgcook (Assess)

imgcook is a software-as-a-service product from Alibaba. It uses intelligent techniques to generate front-end code from different kinds of visual designs (Sketch, PSD, or static images) with one click. imgcook generates static code, and if you define a domain-specific language, it can also generate data-binding module code. The technology is not yet perfect: designers need to follow certain specifications to improve the accuracy of code generation, and developers still need to make adjustments afterward. We have always been cautious about magic code generation, because generated code is usually hard to maintain in the long run, and imgcook is no exception. But if you limit it to a specific context, such as one-off campaign pages, the technique is worth a try.

AWS CodePipeline (Hold)

Based on the experience of multiple ThoughtWorks teams, we recommend approaching AWS CodePipeline with caution. Specifically, we found that once a team’s needs go beyond a simple pipeline, the tool becomes hard to use.
Although it may look like a “quick win” when you first start using AWS, we suggest you take a step back and evaluate whether AWS CodePipeline can meet your long-term needs, such as pipeline fan-out and fan-in, or more complex deployment and test scenarios with special dependencies and trigger conditions.

Snowflake (Trial)

Since Snowflake last appeared on the Radar, we have gained more experience with it, as well as with data mesh as an alternative to data warehouses and data lakes. Snowflake continues to impress with features such as time travel, zero-copy cloning, data sharing, and its marketplace. We have not yet found anything we dislike about Snowflake, so our consultants prefer it over the other options. Amazon’s data warehouse product Redshift is moving toward separating storage and compute, which has always been one of Snowflake’s strengths. If you use the Redshift Spectrum feature for data analysis, you will find it less convenient and flexible, partly because it is constrained by its Postgres heritage (although we do like Postgres). Federated queries may be a reason to choose Redshift. Operationally, Snowflake is simpler to run. Although BigQuery is another option and is very easy to operate, Snowflake is the better choice in a multi-cloud scenario. We have used Snowflake successfully on GCP, AWS, and Azure.

Feature Store (Assess)

A feature store is a data platform for machine learning that addresses some of the key problems we currently face in feature engineering. It provides three basic capabilities: (1) it uses managed data pipelines to remove the friction between pipelines and newly arriving data; (2) it catalogs and stores feature data to promote the discoverability of features and collaboration on them across models; (3) it serves feature data consistently during model training and inference. Since Uber revealed its Michelangelo platform, many organizations and start-ups have built their own feature stores, such as Hopsworks, Feast, and Tecton. We see the potential of feature stores and recommend evaluating them carefully.

Homegrown infrastructure-as-code (IaC) products (Hold)

Products backed by companies or communities (at least those in this industry) are constantly evolving.
But organizations sometimes build frameworks or abstractions on top of existing external products to meet very specific internal needs, believing that the adaptation will offer more benefits than the external product alone. We have found that some organizations try to create homegrown infrastructure-as-code (IaC) products on top of existing external products, and underestimate the effort required to keep evolving these homegrown solutions as their needs change. They soon realize that the original version of the external product they built on is much better than their own. In some cases, the abstraction built on top even weakens the original capabilities of the external product. Although we have seen some organizations succeed with homegrown products, we still recommend considering this approach carefully, because it brings a significant workload and requires a long-term product vision to achieve the desired results.

Combine (Adopt)

A few years ago, we moved ReactiveX (a family of open source frameworks for reactive programming) into the Adopt ring of the Technology Radar. In 2017, we mentioned RxSwift, which brings reactive programming to Swift-based iOS development. Since then, Apple has introduced its own reactive programming framework in the form of Combine. For apps that only support iOS 13 and later, Combine has become the default choice. It is easier to learn than RxSwift and integrates well with SwiftUI. If you want to convert an existing project from RxSwift to Combine, or use both in one project, you can look into RxCombine.

Kotlin Flow (Trial)

The introduction of Kotlin coroutines opened the door to several innovations in Kotlin, and Kotlin Flow, which is integrated directly into the coroutines library, is one of them. Kotlin Flow is an implementation of Reactive Streams built on coroutines. Unlike RxJava, flows are a native Kotlin API, similar to the familiar sequence API, with methods that include map and filter. Like sequences, flows are “cold”, which means that their values are constructed only when needed. All of this makes multithreaded code simpler to write and easier to understand than other approaches. Predictably, converting a flow into a list with the toList method is a common pattern in tests.

River (Assess)

At the core of many machine learning approaches is the creation of a model from a set of training data. Once the model is created, it can be used over and over again. However, the world is not static, and models usually need to change as new data arrives. Simply retraining the model can be slow and expensive. Incremental learning addresses this problem by making it possible to learn incrementally from a stream of data, and therefore to react to change more quickly. As an added benefit, compute and memory requirements are lower and predictable.
We have had good experiences with implementations based on the River framework, but so far we have needed to add verification after model updates, sometimes manually.
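
The incremental learning idea described above can be sketched in a few lines of plain Python. This is an illustrative example only, not River's API: the class, its method names, and the learning rate are assumptions made for the sketch. The model is updated one observation at a time, and each observation is first used to check the current model before it is learned from, which is one simple way to automate some of the verification after updates.

```python
from collections import defaultdict


class OnlineLinearRegression:
    """Linear model trained one example at a time with stochastic gradient descent."""

    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate
        self.weights = defaultdict(float)  # one weight per feature name
        self.bias = 0.0

    def predict_one(self, x):
        """Predict the target for a single example given as a feature dict."""
        return self.bias + sum(self.weights[name] * value for name, value in x.items())

    def learn_one(self, x, y):
        """Update the model from a single (features, target) pair."""
        error = self.predict_one(x) - y
        for name, value in x.items():
            self.weights[name] -= self.learning_rate * error * value
        self.bias -= self.learning_rate * error


def progressive_validation(model, stream):
    """Predict on each example before learning from it.

    Returns the running mean absolute error after every example, so a sudden
    rise can flag that a model update needs closer inspection.
    """
    total_abs_error = 0.0
    history = []
    for count, (x, y) in enumerate(stream, start=1):
        total_abs_error += abs(model.predict_one(x) - y)
        model.learn_one(x, y)
        history.append(total_abs_error / count)
    return history


if __name__ == "__main__":
    # Synthetic stream following y = 2 * x + 1; memory use stays constant
    # because no past examples are stored.
    stream = (({"x": (i % 10) / 10}, 2 * ((i % 10) / 10) + 1) for i in range(2000))
    model = OnlineLinearRegression()
    errors = progressive_validation(model, stream)
    print(model.weights["x"], model.bias, errors[-1])
```

Because the model only keeps its weights, compute and memory per update are fixed, which matches the predictability benefit mentioned above. A production setup would still need drift detection and human review on top of a simple running error.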
