Heart of the Machine Report

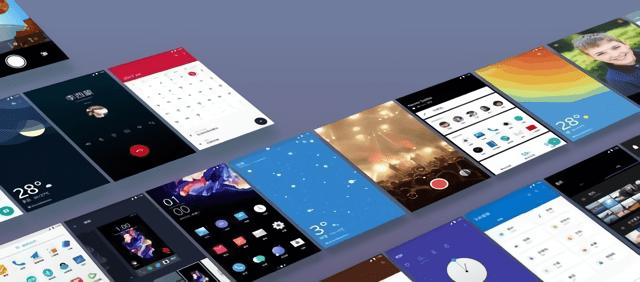

Editor: Dimensions

Microsoft's Swin Transformer, which swept the major CV benchmarks, recently had its code and pre-trained models open-sourced.

Since Google proposed the Transformer in June 2017, it has gradually become the mainstream model in natural language processing. More recently, the Transformer has crossed over into computer vision and begun to show its strength there. A number of new Transformer-based models have emerged, such as Google's ViT for image classification and SETR from Fudan, Oxford, Tencent, and other institutions. As a result, "Is the Transformer all-powerful?" became a hot topic in the machine learning community for a time.

Not long ago, researchers from Microsoft Research Asia proposed a hierarchical vision Transformer computed with shifted windows, which they call Swin Transformer. Compared with the earlier ViT model, Swin Transformer makes two improvements: first, it adopts the hierarchical construction commonly used in CNNs to build a hierarchical Transformer; second, it introduces locality by computing self-attention within non-overlapping window regions.

Link to the paper: https://arxiv.org/pdf/2103.14030.pdf

First, the overall workflow of Swin Transformer. Figure 3a of the paper shows the overall architecture, and Figure 3b shows two consecutive Swin Transformer blocks.

The highlight of this research is the use of shifted windows to compute the representations of a hierarchical Transformer. The shifted-window scheme limits self-attention computation to non-overlapping local windows while still allowing cross-window connections. The resulting hierarchical structure can model features flexibly at different scales, and its computational complexity is linear in the image size. Figure 2 of the paper illustrates how self-attention is computed with shifted windows in the Swin Transformer architecture.
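To make the window idea concrete, here is a minimal sketch in plain Python. It is not the official implementation (the released code is written in PyTorch); the function names `window_partition`, `cyclic_shift`, and `attention_pairs` are chosen for illustration only, and the masking that the real model applies to tokens that wrap around the image border is omitted. It shows three things: splitting a token grid into non-overlapping windows, shifting the grid so that the next layer's windows straddle the previous window boundaries, and counting query-key pairs to see why windowed attention grows linearly with the number of tokens.

```python
def window_partition(grid, m):
    """Split an H x W grid (list of rows) into non-overlapping m x m windows.

    Returns the windows in row-major order; each window is a flat list of
    the m*m tokens it contains.
    """
    h, w = len(grid), len(grid[0])
    if h % m or w % m:
        raise ValueError("grid size must be divisible by the window size")
    windows = []
    for top in range(0, h, m):
        for left in range(0, w, m):
            windows.append([grid[top + i][left + j]
                            for i in range(m) for j in range(m)])
    return windows


def cyclic_shift(grid, s):
    """Roll the grid up and to the left by s positions, wrapping around."""
    h, w = len(grid), len(grid[0])
    return [[grid[(i + s) % h][(j + s) % w] for j in range(w)]
            for i in range(h)]


def attention_pairs(h, w, m=None):
    """Count query-key pairs: global attention if m is None, else windowed."""
    tokens = h * w
    return tokens * tokens if m is None else tokens * m * m


# Token ids 0..63 laid out on an 8 x 8 grid, with window size 4.
grid = [[r * 8 + c for c in range(8)] for r in range(8)]
regular = window_partition(grid, 4)                    # layer l
shifted = window_partition(cyclic_shift(grid, 2), 4)   # layer l + 1
```

In this toy setup, tokens 18 and 45 sit in different regular windows but share the first shifted window, which is how information crosses window boundaries between consecutive blocks. Comparing `attention_pairs(8, 8)` with `attention_pairs(16, 16)` shows the quadratic growth of global attention, while passing `m=4` to both calls shows the windowed count growing only in proportion to the number of tokens.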

These characteristics make the model highly competitive across a range of vision tasks. It achieves 86.4% image classification accuracy on the ImageNet-1K dataset, and 58.7 box AP and 51.1 mask AP on the COCO test-dev dataset. At present, Swin-L (a variant of Swin Transformer) achieves state-of-the-art (SOTA) results in both object detection and instance segmentation on COCO minival and COCO test-dev.

In addition, Swin-L also achieves SOTA in semantic segmentation on the ADE20K dataset, including its val split.

Open source code and pre-trained models

Not long after the Swin Transformer paper was published, Microsoft officially released the code and pre-trained models on GitHub, covering image classification, object detection, and semantic segmentation. Only two days after launch, the project had received 1,900 stars.

Project address: https://github.com/microsoft/Swin-Transformer

First, image classification: the project lists accuracy results for the Swin-T, Swin-S, Swin-B, and Swin-L variants on the ImageNet-1K and ImageNet-22K datasets.

Second, object detection: the project lists results for the Swin-T, Swin-S, Swin-B, and Swin-L variants on the COCO object detection (2017 val) dataset.

Finally, semantic segmentation: the project lists results for the Swin-T, Swin-S, Swin-B, and Swin-L variants on the ADE20K semantic segmentation (val) dataset. Swin-L currently holds the SOTA validation mIoU of 53.50%.

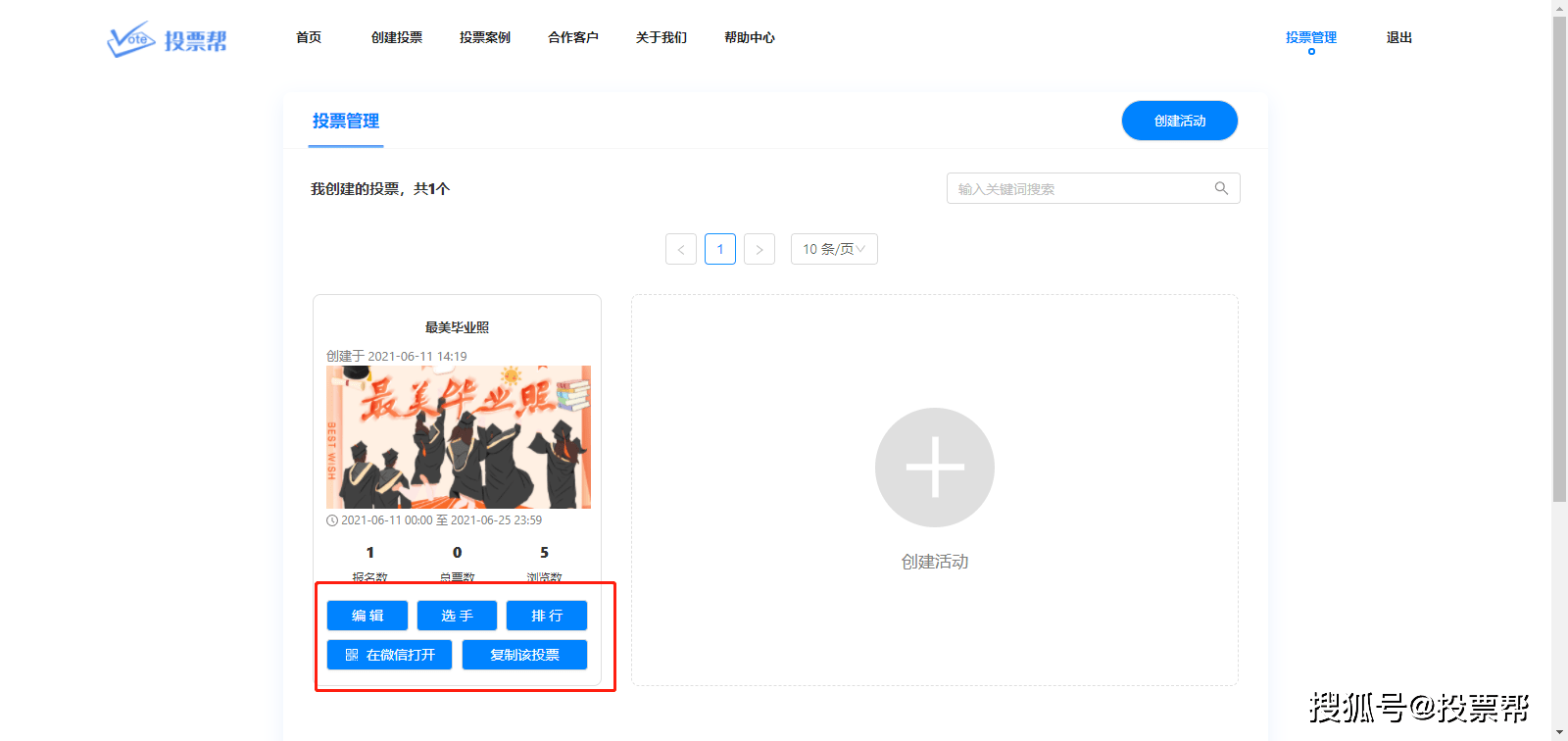
