Heart of the Machine released

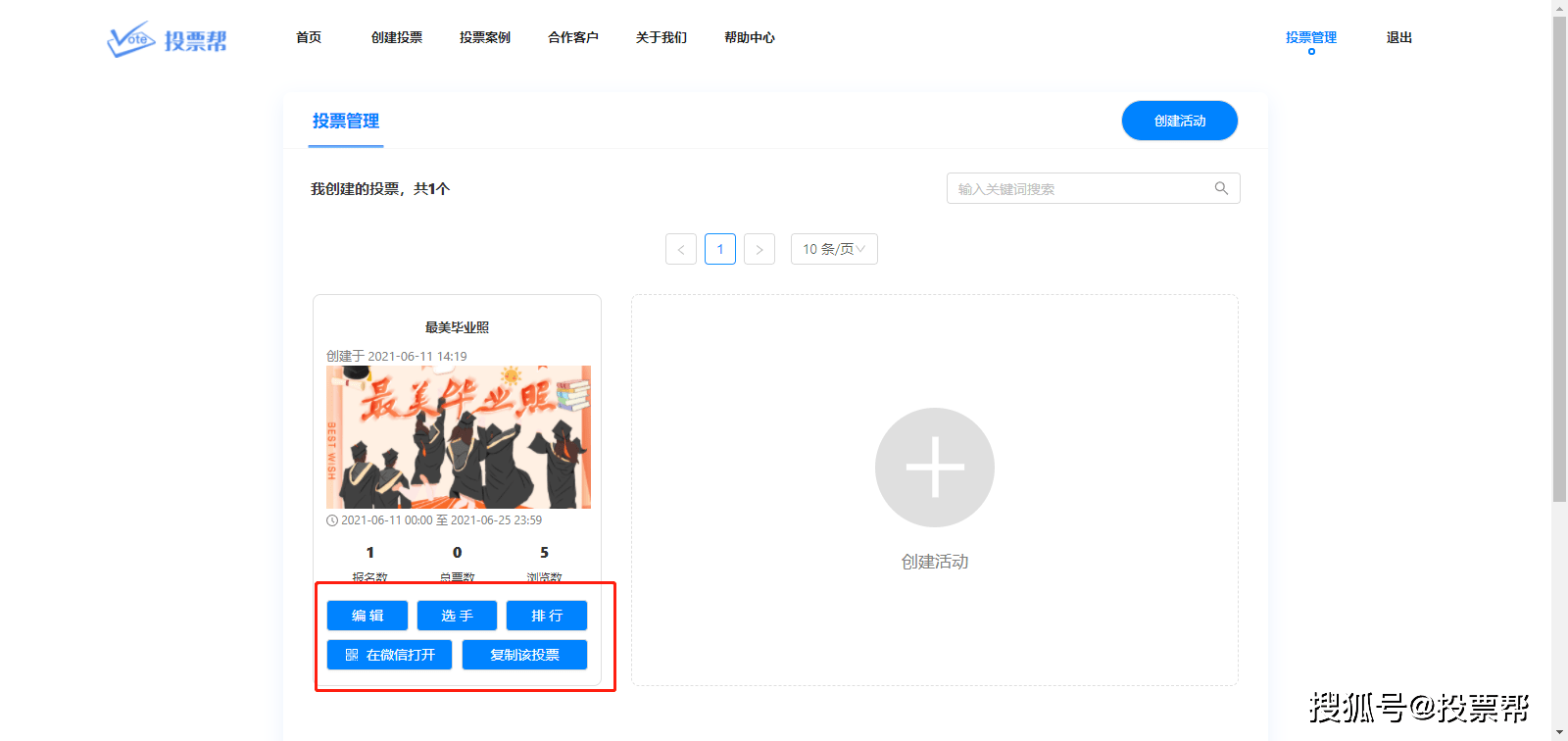

Heart of the Machine Editorial Department

The Yuncong Technology Speech Group has proposed a semantic error correction solution based on the BART pre-trained model. It can correct not only common spelling errors in ASR data, but also common-sense errors, grammatical errors, and even some errors that require reasoning.

In recent years, with the development of automatic speech recognition (ASR) technology, recognition accuracy has improved greatly. However, ASR transcription results still contain some errors that are obvious to humans: we can spot them just by reading the transcribed text, without listening to the audio. Correcting such errors often requires common sense, grammatical knowledge, and even reasoning ability. Thanks to recent progress in unsupervised pre-trained language models, error correction models based on plain-text features can effectively solve such problems.

The semantic error correction system proposed in this paper consists of two modules, an encoder and a decoder. The encoder focuses on understanding the semantics of the ASR system's output text, while the decoder is designed to re-express that text using standardized vocabulary.

Link to the paper: https://arxiv.org/abs/2104.05507

Introduction

Text error correction is an important way to improve ASR recognition accuracy. Common text error correction tasks include grammatical error correction and spelling error correction.
Because the error distribution of ASR transcription differs considerably from the error distributions of those tasks, models built for them are often unsuitable for direct use in an ASR system. Here, the Yuncong Technology Speech Group proposes a Semantic Error Correction (SC) solution based on the BART [1] pre-trained model. It can correct not only common spelling errors in ASR data, but also some common-sense errors, grammatical errors, and even some errors that require reasoning. In experiments on 10,000 hours of data, the error correction model reduces the character error rate (CER) of 3-gram decoding results by a relative 21.7%, an effect similar to RNN rescoring. Applying error correction on top of RNN rescoring yields a further 6.1% relative reduction in CER. Error analysis shows that the actual correction effect is better than the CER metric indicates.

Model

1) ASR semantic error correction system design

The ASR semantic error correction process is shown in Figure 1. The semantic error correction module can be applied directly to the first-pass decoding result as an alternative to the rescoring module. It can also be placed after the rescoring model to further improve recognition accuracy.

Figure 1: ASR system with integrated semantic error correction model

2) Baseline ASR system

The baseline acoustic model uses the pyramidal FSMN [2] structure and is trained on 10,000 hours of Mandarin audio data. The WFST used in first-pass decoding is composed of a 3-gram language model, a pronunciation dictionary, a biphone structure, and an HMM structure. A 4-gram model and an RNN are used for rescoring, and their training data is the reference text corresponding to the audio. The acoustic model and the language models are trained with the Kaldi toolkit [3].

3) Semantic error correction model structure

The proposed semantic error correction model is based on the Transformer [4] structure. It contains 6 encoder layers and 6 decoder layers, and the modeling unit is the token. Training uses teacher forcing: the text output by the ASR system is fed to the input side of the model, and the corresponding reference text is fed to the output side. The input embedding matrix and the output embedding matrix encode the two sides respectively, and cross-entropy is used as the loss function. During inference, the model decodes with beam search, with the beam width set to 3.
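To make the decoding step concrete, here is a minimal plain-Python sketch of beam search with a beam width of 3. It rests on several assumptions: `step_fn` is a hypothetical stand-in for the decoder that returns next-token log-probabilities, the toy probability table is invented for illustration, and no length normalization is applied. It is not the authors' implementation.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical interface: given the tokens decoded so far, return the
# log-probability of each candidate next token.
StepFn = Callable[[Sequence[str]], Dict[str, float]]
Hypothesis = Tuple[List[str], float]


def beam_search(step_fn: StepFn, beam_width: int = 3, max_len: int = 20,
                bos: str = "<s>", eos: str = "</s>") -> List[Hypothesis]:
    """Return hypotheses sorted by total log-probability, best first."""
    beams: List[Hypothesis] = [([bos], 0.0)]
    finished: List[Hypothesis] = []
    for _ in range(max_len):
        # Expand every live hypothesis by every possible next token.
        candidates: List[Hypothesis] = []
        for tokens, score in beams:
            for token, logp in step_fn(tokens).items():
                candidates.append((tokens + [token], score + logp))
        # Keep only the beam_width best expansions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_width]:
            if tokens[-1] == eos:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    finished.extend(beams)  # hypotheses cut off by max_len
    finished.sort(key=lambda c: c[1], reverse=True)
    return finished


def toy_step(tokens: Sequence[str]) -> Dict[str, float]:
    """Made-up next-token distribution that depends only on the last token."""
    table = {
        "<s>": {"a": math.log(0.6), "b": math.log(0.4)},
        "a": {"</s>": math.log(0.5), "b": math.log(0.5)},
        "b": {"</s>": math.log(0.9), "a": math.log(0.1)},
    }
    return table[tokens[-1]]


best_tokens, best_score = beam_search(toy_step, beam_width=3, max_len=6)[0]
print(best_tokens, round(math.exp(best_score), 2))
```

In this toy table a greedy decoder would pick "a" first because it has the higher first-step probability, while the beam keeps "b" alive and ends up with the more probable complete sequence.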

Figure 2: Semantic error correction model based on Transformer

Experiment

1. Error correction training data preparation

The training set of our baseline ASR model is 10,000 hours of Mandarin speech data, which contains about 800 transcribed texts. The test set consists of 5 hours of mixed speech data, including Aishell, Thchs30, and other test sets.

To sample the error distribution of the ASR system's recognition results as fully as possible, we used the following techniques when constructing the training data for the error correction model. First, we use a weak acoustic model to generate the training data: 10% of the speech data is used to train a separate small acoustic model. Second, we perturb the MFCC features by randomly multiplying them by a coefficient between 0.8 and 1.2. Third, we feed the perturbed features into the weak acoustic model, take the top 20 beam search results, and filter the samples by a character error rate threshold. Finally, we pair the filtered decoding results with their corresponding reference texts to form the training data. By decoding the full audio data with the threshold set to 0.3, we obtained about 30 million error correction sample pairs.

2. Input and output representation layers

In the semantic error correction model, the input and output text use the same dictionary. However, a miswritten character in the input text carries more meanings than it does in standard usage, while the output text uses only standard wording. Independent representations of the tokens on the input and output sides therefore suit the error correction task better. The results in Table 1 support this reasoning: when the input and output embedding matrices share weights, the error correction model has a negative effect, whereas when the input and output tokens are represented independently, the CER of the system is reduced by 5.01%.
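The data preparation steps in section 1 above (perturb the features, take the top 20 hypotheses, filter by error rate, pair with the reference) can be sketched roughly as follows. This is only an illustration under stated assumptions: decoding with the weak acoustic model is out of scope, so the n-best list is taken as given; the coefficient is drawn independently per feature value; and hypotheses are kept when their CER is at or below the threshold. The article does not specify these details, and all function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple


def perturb_features(frames: List[List[float]], low: float = 0.8,
                     high: float = 1.2,
                     rng: Optional[random.Random] = None) -> List[List[float]]:
    """Scale each feature value by a random coefficient in [low, high].

    Assumption: one coefficient per value; the article does not say
    whether it is drawn per value, per frame, or per utterance.
    """
    rng = rng or random.Random()
    return [[v * rng.uniform(low, high) for v in frame] for frame in frames]


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two character sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate of a hypothesis against its reference."""
    if not ref:
        raise ValueError("reference must not be empty")
    return edit_distance(ref, hyp) / len(ref)


def build_pairs(nbest: List[str], reference: str, max_cer: float = 0.3,
                top_n: int = 20) -> List[Tuple[str, str]]:
    """Pair the retained hypotheses with the reference text.

    Assumption: a hypothesis is kept when its CER is <= max_cer.
    """
    return [(hyp, reference) for hyp in nbest[:top_n]
            if cer(reference, hyp) <= max_cer]


# Toy usage with Latin letters standing in for Mandarin characters.
pairs = build_pairs(["abcdefghix", "abcxxxxhij"], "abcdefghij")
print(pairs)
```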

3. BART vs BERT initialization

Here, the researchers use pre-trained language model technology to transfer the semantic knowledge learned from large-scale corpora to the error correction scenario, so that the error correction model achieves better robustness and generalization on a relatively small training set. We compare random initialization, BERT [5] initialization, and BART [1] initialization. Because the pre-training task and model structure of BART are the same as those of the Transformer, its parameters can be reused directly. For BERT initialization, both the encoder and the decoder of the Transformer are initialized with the parameters of the first 6 layers of BERT [6].
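As a rough illustration of the BERT initialization described above, the sketch below copies the first 6 layers of a BERT-like parameter dictionary into both the encoder and the decoder of a sequence-to-sequence model. The parameter naming scheme (`layer.<index>.<module>`) and the use of plain lists in place of tensors are assumptions made for this example and do not correspond to any real library. Because BERT is encoder-only, decoder cross-attention has no counterpart to copy from, so in this sketch it keeps its random initial values.

```python
from typing import Dict, List

# Toy stand-in for a state dict: parameter name -> flattened weights.
Params = Dict[str, List[float]]


def init_from_bert(bert_params: Params, model_params: Params,
                   num_layers: int = 6) -> List[str]:
    """Copy the first `num_layers` BERT layers into encoder and decoder.

    Returns the names of model parameters that were NOT overwritten,
    i.e. those that keep their random initialization.
    """
    copied = set()
    for name, weights in bert_params.items():
        parts = name.split(".", 2)  # hypothetical "layer.<index>.<module>"
        if len(parts) != 3 or parts[0] != "layer" or not parts[1].isdigit():
            continue  # skip parameters outside the layer stack
        if int(parts[1]) >= num_layers:
            continue  # only the first num_layers layers are reused
        for side in ("encoder", "decoder"):
            target = f"{side}.layer.{parts[1]}.{parts[2]}"
            if target in model_params:
                model_params[target] = list(weights)
                copied.add(target)
    return sorted(set(model_params) - copied)


# Toy usage: a 12-layer "BERT" and a 6+6 layer encoder-decoder model.
bert = {f"layer.{i}.self_attn": [float(i)] for i in range(12)}
model: Params = {}
for i in range(6):
    model[f"encoder.layer.{i}.self_attn"] = [-1.0]
    model[f"decoder.layer.{i}.self_attn"] = [-1.0]
    model[f"decoder.layer.{i}.cross_attn"] = [-1.0]

print(init_from_bert(bert, model))  # parameters left at random init
```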

The results in Table 2 show that BART initialization reduces the CER of the baseline ASR system by a relative 21.7%, whereas the BERT-initialized model improves only marginally over the randomly initialized one. We speculate that this is because BERT differs too much from the semantic error correction model in structure and training objective, so its knowledge is not transferred effectively. In addition, applying the error correction model to the output of language model rescoring improves recognition accuracy further: compared with the 4-gram and RNN rescoring results, CER is reduced by a relative 21.1% and 6.1%, respectively.
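The percentages above are relative reductions, measured against the baseline CER rather than in absolute CER points. A minimal sketch of the arithmetic follows; the function name and the sample CER values are illustrative assumptions, not figures from the paper.

```python
def relative_reduction(baseline_cer: float, new_cer: float) -> float:
    """Fraction of the baseline error rate removed by the new system."""
    if baseline_cer <= 0:
        raise ValueError("baseline CER must be positive")
    return (baseline_cer - new_cer) / baseline_cer


# Hypothetical values chosen only to illustrate the arithmetic:
# a drop from 10.00% to 7.83% CER is 2.17 absolute points,
# which corresponds to a relative reduction of about 21.7%.
print(f"{relative_reduction(10.00, 7.83):.1%}")
```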

4. Error correction model vs large language model

Generally, an ASR system uses a larger language model to obtain better recognition results, but this also consumes more memory and reduces decoding efficiency. Here, we add a large amount of crawled or open-source plain-text corpora to the reference text of the speech data and retrain the 3-gram, 4-gram, and RNN language models, which we call the large models. The models used in the baseline ASR system are called the small models. The comparison shows that adding error correction on top of the small models achieves higher recognition accuracy than using the large models alone. Moreover, applying semantic error correction on top of the large models improves recognition accuracy further.

Some examples of error correction are as follows:

5. Error analysis

In an error analysis of 300 examples where correction failed, we found that the actual effect of semantic error correction is significantly better than the CER metric suggests. About 40% of the errors hardly affect semantics: for example, some transliterated foreign personal or place names can be written in several ways; some personal pronouns lack context, which leads to confusion among “她”, “他”, and “它” (she, he, it); and some are substitutions of modal particles that do not change the meaning. Another 30% of the errors are not suitable for correction based on plain-text features alone because of insufficient contextual information. The remaining 30% are caused by insufficient semantic understanding or expressive ability of the semantic error correction model.

Summary

This paper proposes a BART-based semantic error correction model that generalizes well and consistently improves the recognition results of multiple ASR systems. In addition, the researchers verified through experiments the importance of independent input and output representations in text error correction. To sample the error distribution of ASR recognition more fully, the paper also proposes a simple and effective strategy for generating error correction data.

Finally, although the proposed semantic error correction method has achieved some gains, there is still room for optimization, for example: 1. Introducing acoustic features would help the model judge whether the text contains errors and reduce the false correction rate. 2. Introducing more contextual information could resolve some semantic ambiguities or missing information in the text. 3. Adapting to vertical business scenarios would improve recognition accuracy for some professional terms.

References

[1] M. Lewis et al., “BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension,” 2019, [Online]. Available: http://arxiv.org/abs/1910.13461.

[2] X. Yang, J. Li, and X. Zhou, “A novel pyramidal-FSMN architecture with lattice-free MMI for speech recognition,” vol. 1, Oct. 2018, [Online]. Available: http://arxiv.org/abs/1810.11352.

[3] D. Povey et al., “The Kaldi Speech Recognition Toolkit,” IEEE Signal Process. Soc., vol. 35, no. 4, p. 140, 2011.

[5] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” 2018, [Online]. Available: http://arxiv.org/abs/1810.04805.

[6] O. Hrinchuk, M. Popova, and B. Ginsburg, “Correction of Automatic Speech Recognition with Transformer Sequence-to-sequence Model,” ICASSP 2020-2020 IEEE Int. Conf. Acoust. Speech Signal Process., pp. 7074-7078, Oct. 2019, [Online]. Available: http://arxiv.org/abs/1910.10697.
