Zhidx (WeChat public account: zhidxcom)

Editor: Li Shuiqing. Zhidx reported on June 21 that the GTIC 2021 Embedded AI Innovation Summit recently concluded successfully in Beijing. The summit was a high-profile AI industry event held before a full audience and watched by more than 1.5 million viewers online. Sixteen leading figures from across the industry chain gathered to discuss software and hardware ecosystem innovation in four major areas: embedded AI, home AIoT, mobile robots, and industrial manufacturing. Their talks were both in-depth and accessible.

At the summit, Chen Chao, R&D director of Jizhijia, gave a speech titled “Challenges and Innovations in the Visual Perception Technology of Logistics Robots”. The speech had three parts. The first introduced logistics and logistics robots. The second and third covered the visual perception challenges logistics robots face, and the solutions adopted, in two scenarios: warehousing and industry.

The logistics industry is a huge market, but it has long faced pain points such as difficulty hiring workers and rapidly changing market demand. Logistics robots emerged against this background. They have since evolved into multi-scenario product forms such as smart sorting, smart handling, smart forklifts, and smart warehousing, and they have been deployed in many real-world scenarios.

Chen Chao recalled that the team overcame many challenges in visual perception technology while bringing Jizhijia's logistics robots to market. The scenarios for warehousing AMRs (autonomous mobile robots) are relatively simple. They mainly consist of shelf-to-person picking and bin-to-person picking. The robots navigate using two-dimensional (QR) codes on the ground. With these codes, the team encountered problems such as defacement and reflection, motion blur, and demands for lower cost.
To address these problems, Jizhijia introduced V1.5 on its latest robots. V1.5 fuses in ground patterns to compensate for how easily the QR codes become soiled. Jizhijia's subsequent V2.0 solution, based on Marker-Net, further reduces the failure rate by two orders of magnitude.

The scenarios and challenges for industrial AMRs are more complex and diverse, and Jizhijia addresses each one differently:

- Scarce samples: Jizhijia combines simulation with learning to expand its samples quickly.
- Unknown targets: it introduced depth cameras, so that deep learning models can combine depth data with image data to improve detection performance.
- High robustness requirements: it uses a composite model approach.
- Positioning in highly dynamic scenes: it uses map updates and semantic maps to keep robots running stably over the long term.
- End-side devices with low computing power: it uses algorithm optimization and acceleration engines to push past the limits of the available compute.

Jizhijia was founded in 2015 and is a leading logistics robot company. It has clearly overcome many hurdles in developing embedded AI for specific application scenarios in warehousing and industry. We have organized the transcript of Chen Chao's speech into the following three parts.

1. The logistics industry accounts for over 10% of GDP, and logistics robots came into being

Ordinary consumers mostly encounter express delivery. However, logistics also includes specialized scenarios such as warehousing logistics and factory logistics. Logistics is a very large and complex industry, accounting for more than 10% of GDP. It guarantees the supply of production and living materials for the entire society.
In recent years, the logistics industry has faced two challenges.

The first is the difficulty of hiring. Everyone can clearly feel society's trend toward an aging population and a declining birthrate, and the working-age population is gradually shrinking. At the same time, young people today prefer to move to big cities for service jobs. They are unwilling to do low-level, tedious logistics work in factories and warehouses, which makes recruitment difficult for logistics companies.

The second is that the logistics industry is changing rapidly. As the economy develops, residents' consumption is upgrading. There are fewer and fewer large-scale standardized industrial products and more highly customized, personalized small-batch production. Products now iterate faster and are upgraded more frequently, which poses new challenges for the supply chain.

Logistics robots emerged in this context. Logistics covers a wide range of areas, so different links call for different robots and solutions. Logistics robots are mainly used in the following areas:

- smart sorting in express delivery;
- smart picking in e-commerce warehouses;
- smart handling robots and smart forklifts on manufacturing production lines;
- integrated solutions for smart warehouses and smart factories.

Let's look at some specific robots. Jizhijia's product family portrait covers most categories of logistics robots. The front row holds the low-profile latent picking robots and handling robots. The middle row holds the sorting robots and bin picking robots. The back row holds the tall unmanned forklifts, human-robot collaborative robots, and composite robots with robotic arms.

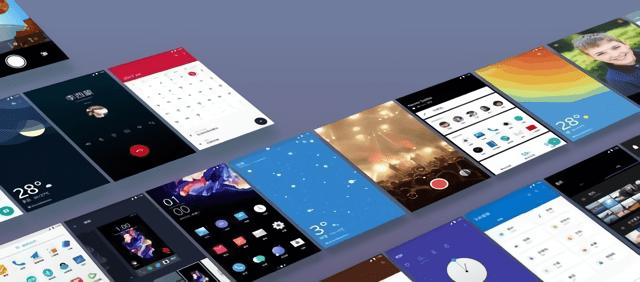

Behind the busy robots is a cloud-edge-end system architecture. It has three layers:

- Bottom: the robot body runs software algorithms such as visual perception, positioning, and PnC (planning and control).
- Middle: the edge server runs the RMS, which is responsible for robot task scheduling and path planning.
- Top: WMS and ERP systems deployed in the cloud connect with the customer's business.

This cloud-edge-end architecture is what guarantees the stable and efficient operation of our logistics robots.

2. Warehousing scenes: visual recognition accuracy needs to improve, and there is strong demand for cost reduction

With a preliminary understanding of the logistics robot system, let's look at the challenges in visual perception and how we responded. Current applications in the warehousing scene are (1) shelf-to-person picking and (2) bin-to-person picking. The goods-to-person picking mode replaces the traditional person-to-goods mode. It works like this:

1. The RMS issues instructions.
2. A robot travels to a specific location and carries the corresponding shelf or bin to the picking station.
3. An operator picks the goods manually.

Operators no longer need to walk between shelves looking for goods. The new mode greatly reduces pickers' labor intensity and significantly lowers the picking error rate. As a result, overall efficiency improves by 2 to 3 times.
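The speech does not say how the RMS plans robot paths. One hypothetical illustration is a central scheduler routing a single robot across a QR-code grid with textbook A* search. The grid encoding (1 = blocked cell), the function name `plan_path`, and the 4-connected move model are all assumptions made for this sketch. None of them is Jizhijia's method.

```python
import heapq

def plan_path(grid, start, goal):
    """Hypothetical A* planner on a 4-connected grid.

    grid[r][c] == 1 marks a blocked cell (e.g. a parked shelf).
    Returns the list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

This sketch plans for one robot in isolation. A scheduler running many robots on the same floor would also need to reserve cells over time so that robots do not collide.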

How are the picking robots positioned? Attentive viewers will have noticed in the video that QR codes are laid out in a dense grid on the floor. A camera mounted on the bottom of the robot captures these QR codes for positioning. The principle is similar to scanning a code with a mobile phone.

Because the QR codes are on the floor, they face some particular problems:

- Defacement: trailer trucks often operate in the warehouse, and the warehouse is regularly cleaned with powerful floor-cleaning machines. Both can damage the QR codes on the ground.
- Motion blur: the robot travels at 2 to 3 meters per second or more, with its lens very close to the ground. The image therefore shifts by many pixels per unit of time, and the blur is severe.
- Cost: fierce market competition demands low cost, and the market is pushing for cheaper sensors and computing chips in picking robots.

We have iterated our technology and products in response to these problems. In an earlier version, we chose a very low-cost heterogeneous SoC (system-on-chip). Our traditional geometric-feature-based algorithms were optimized and accelerated with an FPGA, which ultimately achieved a good performance-to-price ratio and performance-to-power ratio.

We then introduced ground patterns to cope with contaminated QR codes and to reduce the number of QR codes that must be deployed. If you look closely, the floor actually has very fine textures that are unique. Like the code value on a QR code, these textures act as IDs that can be used for positioning. Because of the particular nature of ground patterns, we apply a series of transformations to extract their global features and build a map for localization.
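The speech does not detail the positioning math. The sketch below shows one way a robot could recover its pose from a single floor code seen by a downward-facing camera. It uses the code's known world position (looked up from the decoded ID) and where the code appears in the image. Everything here is a hypothetical simplification: the function name, the pure-scale camera model, and the axis conventions.

```python
import math

def robot_pose_from_code(code_world_xy, code_px, code_angle_rad,
                         image_center_px, metres_per_px):
    """Estimate the camera's world pose (x, y, heading) from one floor code.

    Hypothetical assumptions, for illustration only:
    - the camera looks straight down, so the floor maps to the image by a
      uniform scale `metres_per_px` (no lens distortion, no perspective);
    - the image u/v axes are aligned with the robot's x/y axes (no flip);
    - the code's ID was already decoded and looked up to give
      `code_world_xy`, and codes are laid out aligned with the world axes.
    """
    # A world-aligned code appears rotated by -heading in the image.
    heading = -code_angle_rad
    # Offset from the code centre to the image centre, in metres, robot frame.
    dx = (image_center_px[0] - code_px[0]) * metres_per_px
    dy = (image_center_px[1] - code_px[1]) * metres_per_px
    # Rotate the offset into the world frame and add it to the code position.
    c, s = math.cos(heading), math.sin(heading)
    x = code_world_xy[0] + c * dx - s * dy
    y = code_world_xy[1] + s * dx + c * dy
    return x, y, heading
```

A real system would typically also calibrate lens distortion and the camera's mounting offset. Between codes, it would rely on odometry.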
Of course, ground patterns have limitations. They cannot be used on the epoxy floors of factories or on some high-gloss tiled floors. On our latest robots, we deploy a forward-facing camera at the front and use a neural network method, Marker-Net, on the back end. This greatly expands our QR code detection capability. Previously, our camera pointed downward with a very small field of view. If the robot deviated by even a few centimeters to ten centimeters, it would lose its position. The forward-facing camera can now detect QR codes over a much larger range and relocalize the robot, which reduces the failure rate by two orders of magnitude.

Many people ask why we use a network for QR codes, since they are artificially designed objects with obvious geometric features. The detection task has changed compared with phone-based code scanning or our earlier downward-looking detection. QR codes must now be detected over a larger range and at more oblique viewing angles, and blurred or contaminated codes must still be recognized. Under these conditions, data-driven models outperform traditional methods built on hand-designed features. Flexible camera placement combined with intelligent back-end algorithms lets QR codes be used far more widely in the scene: on the sides of shelves, on ceilings, or almost anywhere. This is a test done in a container at a logistics port, where QR codes could be detected and recognized in a very dark environment.

3. Industrial scenes: handling more complex environments and building simulation schemes

The industrial scenario brings its own visual perception challenges for logistics robots, and our responses differ accordingly. Compared with the warehousing environment mentioned earlier, these challenges involve more operations and a wider range.
In industrial scenes, different types of robots serve different tasks. They include roller-conveyor handling robots (on the left), lifting and handling robots, robots carrying robotic arms, and smart forklifts. Requirements are diverse and scenes are complex, and each robot faces different challenges in its tasks. Let's look at them in detail.

First, there are few samples. Take composite robots as an example. When grasping at the end of the process, they must detect and recognize more than 100,000 kinds of commodities. Applications such as autonomous vehicles can draw on public datasets, but industrial applications rarely find suitable training samples there. At the same time, industrial customers have data privacy requirements, which further restricts sample collection.

We adopt a simulation-plus-learning approach. Take pallets as an example. Pallets are carriers used widely throughout logistics, and they come in many types: European-standard, national-standard, and non-standard. They also vary in color, shape, size, and material. Some customers even improvise pallet-like items from locally available raw materials. We use a simulation engine to quickly build single-target renderings under different lighting, colors, and positions, which rapidly expands our samples. For some applications, we can simulate the full scene. We also use few-shot learning and transfer learning to quickly develop and adapt new applications. These methods reuse the data and trained models accumulated in earlier industrial projects.

Second, unknown target detection. Targets come in many types, and anomaly detection or obstacle detection cannot enumerate every possible target. The object dropped in front of the robot might be a wrench or a part from the production line, or it might be a mouse or a battery. Traditional deep learning methods rely too heavily on previously seen samples. When they face items they have never seen, their performance drops sharply.
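Returning to the pallet simulation approach described above: the sketch below covers only the parameter-sampling half of such a pipeline, which randomizes lighting, color, and position. The speech does not name the rendering engine, so each config would be handed to whatever renderer is used. The class `RenderConfig`, the pallet-type labels, and all numeric ranges are hypothetical.

```python
import random
from dataclasses import dataclass

# Hypothetical labels for the pallet families mentioned in the speech
PALLET_TYPES = ["euro_standard", "national_standard", "non_standard"]

@dataclass
class RenderConfig:
    """One randomized rendering job for a single pallet (hypothetical fields)."""
    pallet_type: str
    light_intensity: float  # relative brightness, arbitrary units
    color_rgb: tuple        # pallet surface color, 0-255 per channel
    position_xy: tuple      # metres in front of the virtual camera
    yaw_deg: float          # pallet rotation relative to the camera

def sample_render_configs(n, seed=0):
    """Draw n randomized configs; a fixed seed makes the dataset reproducible."""
    rng = random.Random(seed)
    configs = []
    for _ in range(n):
        configs.append(RenderConfig(
            pallet_type=rng.choice(PALLET_TYPES),
            light_intensity=rng.uniform(0.2, 1.5),
            color_rgb=tuple(rng.randint(0, 255) for _ in range(3)),
            position_xy=(rng.uniform(-2.0, 2.0), rng.uniform(0.5, 5.0)),
            yaw_deg=rng.uniform(-30.0, 30.0),
        ))
    return configs
```

Seeding the generator makes a synthetic batch reproducible. That helps when comparing models trained on different batches.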

We introduce deep learning methods that combine depth data with RGB data for detection. Depth cameras come in many types, including stereo cameras, ToF (time-of-flight) cameras, and structured-light cameras. We choose the sensor according to the required detection distance and accuracy. We use a stereo camera for obstacle detection. Its depth map and grayscale image are naturally aligned at the pixel level, which is convenient for subsequent information fusion. Depth-based detection does not depend on a previously trained model of the target, so it detects unseen targets better.

However, our robots use very cheap depth sensors whose accuracy is far below that of lidar. For low, small objects, much less usable depth data is available. For very distant objects, the depth map in principle degenerates into a 2D image. Depth must therefore be combined with RGB data for detection.

Third, robustness. In industrial scenarios, safety and efficiency requirements mean that equipment must be very robust. Take forklifts as an example. They carry heavy loads and can be very destructive, so a detection error can cause serious damage to goods and people. We adopt a composite model approach that combines deep learning with domain knowledge. The network quickly outputs potential detections. For pallet detection or certain bin detection, we know what the target is, so we use a prior model to verify the network's output. This yields more robust and more accurate results.
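Standard stereo geometry explains why distant objects "degenerate" in a stereo depth map. Let f be the focal length in pixels, B the baseline, and d the disparity. Depth is Z = f·B/d. A fixed disparity error Δd therefore produces a depth error of roughly Z²·Δd/(f·B), which grows with the square of distance. The sensor values below are hypothetical, chosen for illustration: a 700 px focal length, a 5 cm baseline, and a quarter-pixel disparity error. They are not the specs of Jizhijia's sensor.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity: point at (or beyond) infinity
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px, baseline_m, depth_m, disparity_err_px):
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px

if __name__ == "__main__":
    f, b, dd = 700.0, 0.05, 0.25  # hypothetical sensor parameters
    for z in (1.0, 3.0, 10.0):
        d = f * b / z
        print(f"Z={z:4.1f} m  disparity={d:5.2f} px  "
              f"depth error ~{depth_error(f, b, z, dd):.3f} m")
```

With these assumed numbers, the depth error is about 7 mm at 1 m but about 0.71 m at 10 m. This illustrates why depth alone becomes unreliable far from a low-cost stereo camera and needs to be complemented by RGB.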

Fourth, positioning in highly dynamic scenes. Warehouse robots rely on QR codes on the ground, but industrial settings more often use SLAM robots. SLAM (simultaneous localization and mapping) uses sensors to observe the environment, build a map, and localize within it. This is much like people looking around a scene and orienting themselves by visual landmarks. SLAM-based positioning has several advantages. There is no need to modify the scene or lay large numbers of QR codes, so deployment is more convenient. The robot's routes are also not confined to a grid but can follow arbitrary trajectories. Because of this flexibility, SLAM robots better fit the needs of flexible manufacturing customers and are widely used in manufacturing.

A major challenge for SLAM-based positioning is losing localization in highly dynamic environments. For example, the bins on a production line change over the course of a shift. When the robot picks up bins there may be 10 of them, and when it returns only 2 remain. When the scene changes like this, a robot localizing against its old map is navigating with an outdated map, and it will probably fail.

We adopt map updates and semantic maps. The map update builds on the cloud-edge-end architecture mentioned earlier:

1. Each end-side robot is given the ability to detect changes.
2. When a robot finds that its observations no longer match the map well, it uploads the data to the edge server.
3. The edge server integrates the data collected by multiple robots, judges the changes against the earlier static reference map, and performs a fused map update.
4. The edge server sends the fused map to every robot, so that each robot localizes with the latest map.

The semantic map uses a network to detect objects and recognize whether they are dynamic or static:

- People and material carts move around, so they cannot serve as landmarks and are removed from the map.
- Heavy equipment can move but does so rarely, so its confidence in the map is decreased.
- Floor markings, walls, pillars, and similar objects are highly static, so their confidence in the map is increased.

Positioning based on semantic targets may be slightly less accurate than traditional feature-point-based positioning, but it is very robust. A single object in the scene can partially or even fully constrain the robot's pose, which keeps the robot running smoothly.

Fifth, low computing power. Price, size, power consumption, and other factors constrain end-side devices, so we cannot use high-performance computing chips on them. Instead, we optimize at the algorithm and software level.
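The semantic-map weighting described above drops people and carts, down-weights rarely moved heavy equipment, and up-weights floor markings, walls, and pillars. It can be sketched as a class-to-confidence table. The speech does not specify how confidences are represented or combined. The labels, the weights, and the choice to average per-landmark position estimates are therefore hypothetical simplifications.

```python
# Hypothetical per-class confidence for use as a localization landmark.
LANDMARK_CONFIDENCE = {
    "person": 0.0,           # dynamic: never used as a landmark
    "material_cart": 0.0,    # dynamic: never used as a landmark
    "heavy_equipment": 0.3,  # movable but rarely moved: low confidence
    "floor_marking": 0.9,    # highly static
    "wall": 1.0,             # highly static
    "pillar": 1.0,           # highly static
}

def fuse_position(observations, default_conf=0.5):
    """Confidence-weighted average of robot-position estimates.

    observations: list of (label, (x, y)), where (x, y) is the robot position
    implied by matching that landmark against the semantic map.
    Returns (x, y), or None if no observation has positive confidence.
    """
    total_w = sum_x = sum_y = 0.0
    for label, (x, y) in observations:
        w = LANDMARK_CONFIDENCE.get(label, default_conf)
        if w <= 0.0:
            continue  # dynamic objects are ignored entirely
        total_w += w
        sum_x += w * x
        sum_y += w * y
    if total_w == 0.0:
        return None
    return sum_x / total_w, sum_y / total_w
```

A production localizer would more likely weight landmark residuals inside a pose optimizer than average positions directly. Even so, this sketch shows the class-dependent weighting in isolation.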
For network models, we optimize at three levels: the numerical computation, the network structure itself, and the overall detection pipeline. We also use the optimization and inference engines provided by major hardware vendors to optimize the deployment of our algorithms. Take OpenVINO as an example. Quantization tuning and pruning are performed on the server side, and the optimized model is deployed to a low-compute platform through the IE (Inference Engine). Intel's OpenVINO can fully tap the whole processor. It uses not only the CPU but also other computing units, especially the integrated graphics, making full use of on-chip resources.
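The quantization and pruning steps mentioned above can be illustrated with two textbook techniques: symmetric per-tensor int8 quantization and magnitude pruning. This pure-Python sketch is not OpenVINO's API or algorithm. It only shows the underlying arithmetic on a flat list of weights, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the int8 range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 levels back to approximate float weights."""
    return [scale * v for v in q]

def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)
    pruned = list(weights)
    # Indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned
```

Rounding to the nearest level bounds each weight's quantization error by half the scale, so precision is lost mainly on small weights in tensors with large outliers. This is one reason tuning is done per model rather than applied blindly.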

That's all for today. We look forward to working with colleagues and partners to explore applications of visual AI technology in logistics and to build intelligent robots that make logistics easier. Thank you all! The above is the complete compilation of Chen Chao's speech.
