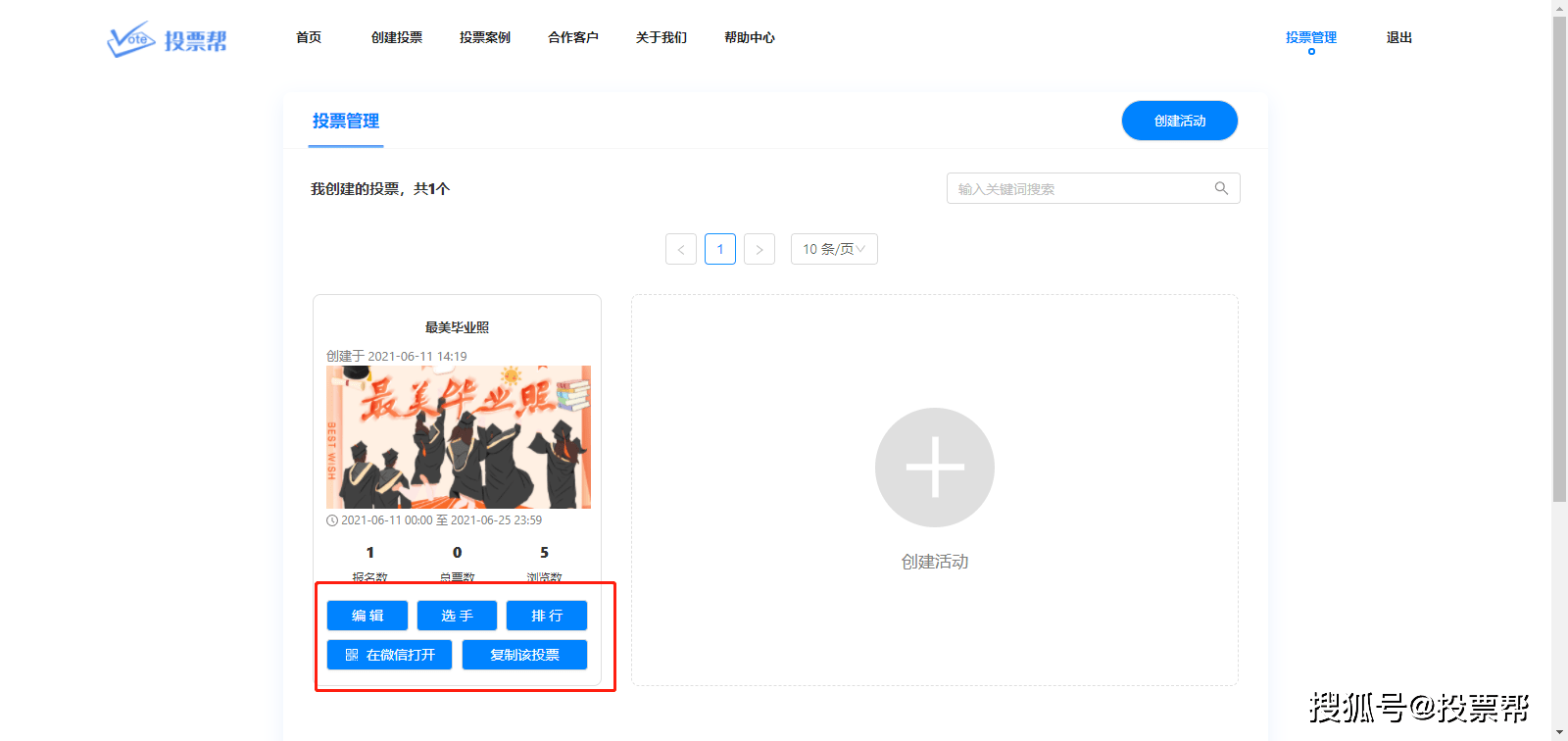

File photo. Image source: Unsplash

Beijing News (Reporter Xiao Longping) On June 18, the Intelligent Media Research Center of the School of Journalism and Communication of Tsinghua University and the Xinjing Think Tank jointly held an online forum on “Algorithms Make Good Use of Intelligent Humanities: Multidisciplinary Integration of Intelligent Information”. Experts and scholars from the School of Journalism and Communication of Tsinghua University, the School of Journalism and Communication of Beijing Normal University, the School of Journalism of Fudan University, the School of Philosophy of Renmin University of China, the School of Journalism and Communication of Communication University of China, and the School of Communication of Shenzhen University discussed the positive significance of algorithm technology for value creation and social development.

Algorithms at the “center of the storm” have opened up new paths of cognition, psychology and thinking

In recent years, the term “algorithm” has entered the public eye with unprecedented frequency. Some algorithm-related events have drawn attention and heated discussion in China and around the world, putting algorithms at the “center of the storm”. Yet algorithms are not a new concept: they have long been discussed in mathematics and in computer programming. According to Yu Guoming, former dean of the School of Journalism and Communication of Beijing Normal University and a Changjiang Scholar, “algorithms” have suddenly become a hot topic because the rapid development of information dissemination technology has created an “algorithmic” digital economy and a new society of information dissemination. As the foundation of the digital economy, algorithms are moving from “behind the scenes” to the “foreground”.
Jiang Qiaolei, associate professor of the School of Journalism and Communication of Tsinghua University and deputy director of the Intelligent Media Research Center, believes that algorithm technology may not only serve as a tool that greatly extends the depth and breadth of earlier media effects, but may also open up new paths for people’s cognition, psychology and thinking. For example, some scholars studying memory have found that after people start using smart media, they shift from remembering the specific content of information to remembering the path to it, that is, how to find it. People no longer remember the content itself accurately; they remember where it can be found. When the content is needed, it is retrieved through a search engine, and the algorithm smooths the channel for acquiring information.

Facing ethical dilemmas and improving algorithm transparency

While algorithms bring convenience to human life, they also expose contradictions between technology and people, and between technology and the humanities, including conflicts of values and ethical dilemmas. Zhang Taofu, executive dean of the School of Journalism at Fudan University and a Changjiang Scholar, said that the biggest contradiction at present appears to have been magnified by technology. What is that contradiction? It is the contradiction between the limits of human cognition and the overproduction and overload of information. In the technological age, people’s cognitive ability has not multiplied, but the amount of information they hold has grown exponentially. “How can I find the content I need in a limited time amid such a large amount of information? The individual cannot solve this alone. A solution must be provided for us through technology and algorithms,” Zhang Taofu said.
At the same time, worries about the “information cocoon” have followed one after another. American scholar Cass R. Sunstein put forward the concept of the “information cocoon” in a political context. In China, this concept has been misunderstood and misused. For example, one view holds that continuous algorithmic recommendation keeps users for a long time inside a field of similar information shaped by their personal interests, forming a “cocoon” that shuts out other reasonable opinions. So does the “information cocoon” really exist? Chen Changfeng, executive vice dean and professor of the School of Journalism and Communication of Tsinghua University, believes that if people lived in a closed environment containing only homogeneous, algorithm-pushed information and received only one type of information, a “cocoon” could form. In fact, however, the information environment of our era is diverse and rich, and content with differing views cannot be avoided. Today’s diverse information environment is itself at odds with the formation of an “information cocoon”.

The “information cocoon” is only a worry. Chen Changfeng explained that information aggregation platforms can enhance the diversity of information by optimizing the technical model of the algorithm. This gives individuals the conditions to obtain richer information and also stimulates users’ own initiative, making them an “active audience”. Media literacy education is therefore also a way to resolve the misunderstanding about the “cocoon”. Jiang Qiaolei agreed that when the transparency of algorithms increases and a wider range of users can take part, worry about algorithms will naturally diminish.
Make good use of algorithms and guide technological development with social ethics

In past historical and cultural contexts, the ethics of algorithm technology was not part of the discussion. Jiang Qiaolei pointed out that in a society where people are the main actors, legal regulation and moral expectations have been discussed fully and maturely, but communication ethics and media ethics in which algorithms are the main actors need to be re-examined, including how existing legal norms and moral concepts can adapt to and accommodate them. According to Zhang Xiao, deputy dean and professor of the School of Philosophy, Renmin University of China, our use of algorithms should be limited, because artificial intelligence built on algorithms is in fact limited intelligence, not human intelligence. At the same time, algorithms are just tools, and responsibility cannot all be shifted onto them. A limited agent constructed by algorithms should not bear any moral responsibility, because at the ethical level it has no autonomy, self-discipline, self-control or self-awareness, and it is not a moral subject. Zhang Xiao believes that a full evaluation and ethical review of the values embedded in the design and operation of algorithms will help people think through the ethical issues that may arise in their design, operation and application, along with the moral consequences those issues may bring, so that the public develops a certain sensitivity in advance. Zhang Taofu said that technology is now in front, people are in the middle, and regulation is at the back. It is therefore necessary to strengthen the regulation of algorithms and other technologies, because only by establishing mechanisms can the behavior of the public be guided. Earlier, the European Union’s “Ethics Guidelines for Trustworthy AI” proposed the principle of making good use of algorithms.
The future of intelligent algorithms lies in algorithms that understand ethics. In the algorithm development process, a cooperative “algorithm development mechanism” is adopted: stakeholders are involved in developing the algorithms, forming a governance model in which stakeholders take part in decision-making. Chao Naipeng, dean and professor of the School of Communication of Shenzhen University, believes that algorithms developed with multiple stakeholders can, to a certain extent, effectively manage ethical issues such as the so-called algorithmic “black box”. Cultivating users’ digital literacy is another way to address concerns about algorithms. Once users understand the operating logic of the technology itself, they can move past the “black box” from an epistemological perspective. Chang Jiang, a distinguished professor of the School of Communication of Shenzhen University and executive director of the Center for Media Convergence and International Communication, said, “Even if some things can never be made transparent, I can still understand what the logic of the ‘black box’ is.” Qiu Junqian, an associate professor at the School of Journalism and Communication of Communication University of China, emphasized that finding and understanding the boundary objects in the intelligent ecosystem, and planning for and predicting the technical and ethical logic behind them, will help open the “black box” and allow technology to serve people better. “We don’t need to be consumed by worry, crudely knock algorithms down, and brand them with original sin,” Chen Changfeng said. Technology helps the public, surrounded by ample information and many choices, to pursue their own preferences and to communicate more; new technology should be used with a positive attitude so that it serves humanity. Serving social groups with algorithmic information is what science and technology are meant to do.
Toutiao has launched “Toutiao Searching for People”, and Douyin has launched the “Sunflower Project” to safeguard minors’ use of the internet. Other internet platforms are following closely, combining algorithm technology with social development to serve the public. “We need to turn well-intentioned advocacy and rational criticism into the effective development of algorithms, and use social demands to promote technological innovation. This is the ultimate goal of making good use of algorithms,” said Chao Naipeng.

Reporter: Xiao Longping. Editor: Zhang Xiaoyuan. Proofreading: Li Lijun.

Spress.net is a general newspaper in English which is updated 24 hours a day

© Spress.net
