Heart of the Machine Report

Author: boat, Chen Ping

A new architecture called MobileStyleGAN, built on style-based GANs, greatly reduces both the number of parameters and the computational complexity.

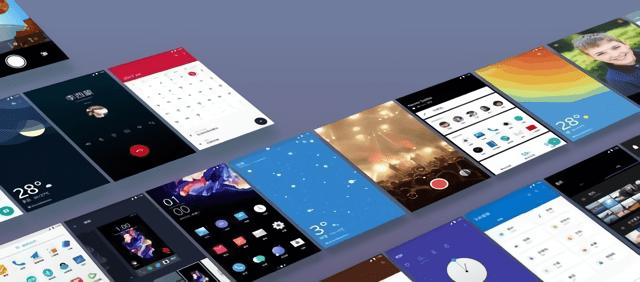

In recent years, generative adversarial networks (GANs) have seen more and more applications in generative image modeling. Style-based GANs can control different levels of detail, from the shape of the head to the color of the eyes. They achieve state-of-the-art (SOTA) results in high-fidelity image synthesis, but the generation process is computationally expensive, which makes them difficult to deploy on mobile devices such as smartphones.

Recently, a study on optimizing the performance of style-based generative models has attracted attention. It analyzes the most computationally expensive parts of StyleGAN2 and proposes changes to the generator network that make it possible to deploy a style-based generative network on edge devices. The resulting architecture is called MobileStyleGAN. Compared with StyleGAN2, it has about 71% fewer parameters and about 90% lower computational complexity, while generation quality is almost unchanged.

Comparison of images generated by StyleGAN2 (top) and MobileStyleGAN (bottom).

The author of the paper has put the PyTorch implementation of MobileStyleGAN on GitHub.

Paper address: https://arxiv.org/pdf/2104.04767.pdf

Project address: https://github.com/bes-dev/MobileStyleGAN.pytorch

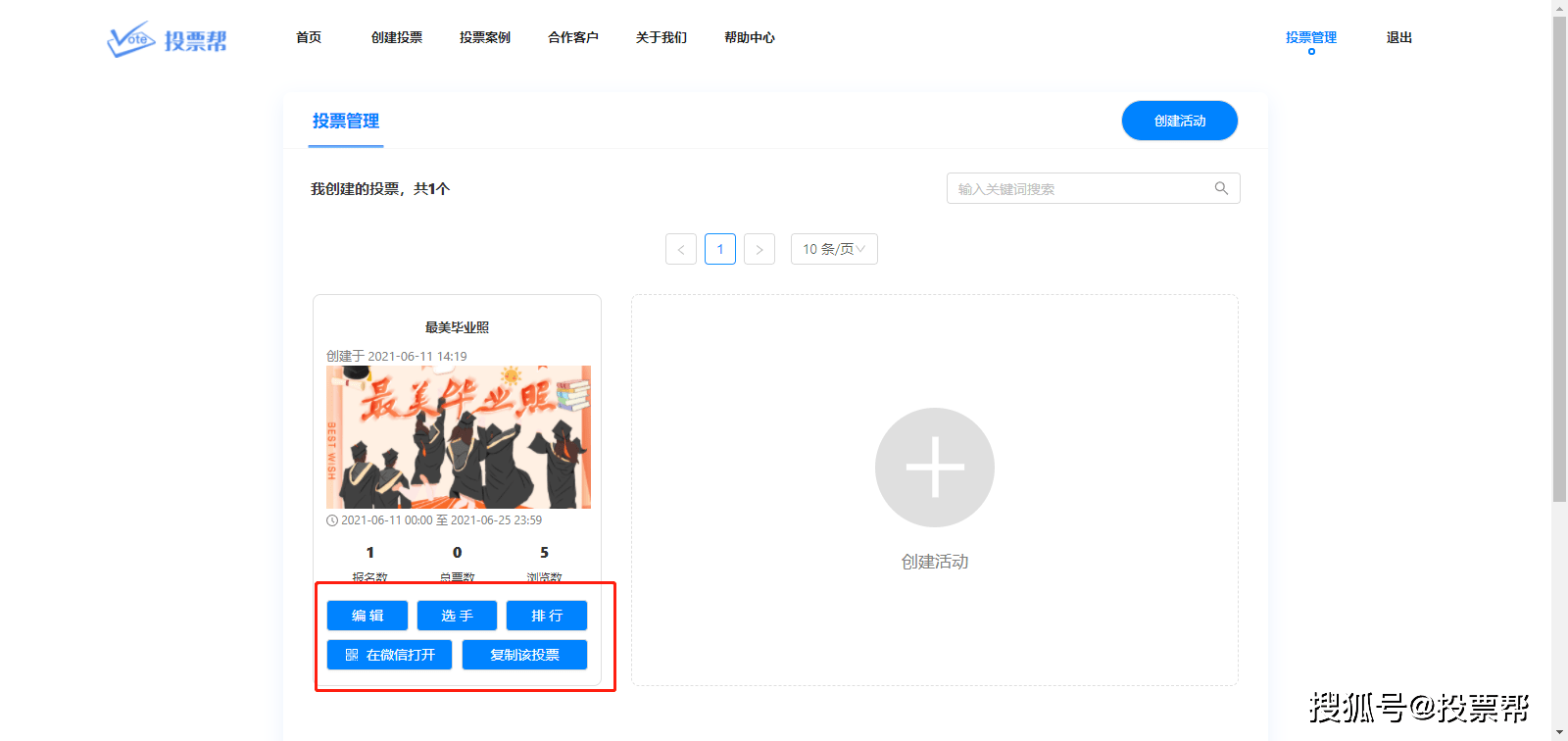

The training code required for this implementation is very simple:

Results of StyleGAN2 (left) and MobileStyleGAN (right).

Let’s take a look at the details of the MobileStyleGAN architecture.

MobileStyleGAN architecture

MobileStyleGAN builds on the style-based generative model and consists of a mapping network and a synthesis network. It reuses the mapping network from StyleGAN2; the focus of this research is the design of a computationally efficient synthesis network.

The difference between MobileStyleGAN and StyleGAN2

StyleGAN2 uses a pixel-based image representation and directly predicts the pixel values of the output image. MobileStyleGAN uses a frequency-based representation and predicts the discrete wavelet transform (DWT) of the output image. Applied to a 2D image, the DWT converts each channel into four channels of equal size, each with lower spatial resolution and covering a different frequency band. The inverse discrete wavelet transform (IDWT) then reconstructs the pixel-based representation from the wavelet domain, as shown in the figure below.
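To make the DWT/IDWT round trip concrete, here is a minimal sketch of a one-level 2D Haar transform on a single channel, written in plain Python. The choice of the Haar wavelet, the function names `haar_dwt2` and `haar_idwt2`, and the sub-band naming are assumptions made for illustration; they are not taken from the MobileStyleGAN code.

```python
def haar_dwt2(img):
    """One-level 2D Haar DWT of one channel (list of rows, even height and width).

    Returns four sub-bands (LL, LH, HL, HH), each half the height and width.
    Sub-band naming conventions vary between libraries; this one is illustrative.
    """
    h, w = len(img), len(img[0])
    ll, lh, hl, hh = ([[0.0] * (w // 2) for _ in range(h // 2)] for _ in range(4))
    for i in range(h // 2):
        for j in range(w // 2):
            # The four pixels of one 2x2 block.
            a, b = img[2 * i][2 * j], img[2 * i][2 * j + 1]
            c, d = img[2 * i + 1][2 * j], img[2 * i + 1][2 * j + 1]
            ll[i][j] = (a + b + c + d) / 2  # low-pass in both directions
            lh[i][j] = (a - b + c - d) / 2  # difference between columns
            hl[i][j] = (a + b - c - d) / 2  # difference between rows
            hh[i][j] = (a - b - c + d) / 2  # diagonal difference
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: merges four sub-bands into one channel at 2x resolution."""
    h, w = len(ll), len(ll[0])
    img = [[0.0] * (2 * w) for _ in range(2 * h)]
    for i in range(h):
        for j in range(w):
            p, q, r, s = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            img[2 * i][2 * j] = (p + q + r + s) / 2
            img[2 * i][2 * j + 1] = (p - q + r - s) / 2
            img[2 * i + 1][2 * j] = (p + q - r - s) / 2
            img[2 * i + 1][2 * j + 1] = (p - q - r + s) / 2
    return img


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
ll, lh, hl, hh = haar_dwt2(image)        # four 2x2 sub-bands from one 4x4 channel
restored = haar_idwt2(ll, lh, hl, hh)    # back to the original 4x4 channel
```

The transform has no trainable parameters and loses no information, which is why a network can predict the four sub-bands and still recover a full-resolution image.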

StyleGAN2 uses a skip generator, which forms the output image by explicitly summing RGB values predicted at multiple resolutions of the same image. The study found that when the image is predicted in the wavelet domain, skip-connection-based prediction heads contribute little to the quality of the generated image. To reduce computational complexity, the study therefore replaces the skip generator with a single prediction head on the last block of the network. Predicting the target image from intermediate blocks is nevertheless important for stable image synthesis, so the study adds an auxiliary prediction head to each intermediate block, which predicts the target image at the corresponding spatial resolution.

The prediction head difference between StyleGAN2 and MobileStyleGAN.

As shown in the figure below, modulated convolution consists of modulation, convolution, and normalization (left). Depthwise separable modulated convolution contains the same three parts (middle). StyleGAN2 defines modulation and demodulation on the weights; the study instead applies them to the input and output activations, respectively, which makes depthwise separable modulated convolution easier to describe.
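The following sketch illustrates why modulation and demodulation can be moved from the weights to the activations. It uses an ordinary 1x1 convolution at a single pixel, which is just a matrix-vector product, and compares the two formulations. The function names and the example numbers are hypothetical, and the sketch covers only the plain-convolution case, not the depthwise separable variant used in MobileStyleGAN.

```python
import math


def modulated_conv_on_weights(w, s, x, eps=1e-8):
    """Weight formulation: scale weights per input channel, then
    normalize (demodulate) each output channel's weights."""
    out = []
    for row in w:  # one row of weights per output channel
        mod = [wi * si for wi, si in zip(row, s)]
        demod = 1.0 / math.sqrt(sum(m * m for m in mod) + eps)
        out.append(demod * sum(m * xi for m, xi in zip(mod, x)))
    return out


def modulated_conv_on_activations(w, s, x, eps=1e-8):
    """Activation formulation: scale the input, apply the unmodified
    weights, then scale each output channel by its demodulation factor."""
    x_mod = [xi * si for xi, si in zip(x, s)]
    out = []
    for row in w:
        demod = 1.0 / math.sqrt(sum((wi * si) ** 2 for wi, si in zip(row, s)) + eps)
        out.append(demod * sum(wi * xi for wi, xi in zip(row, x_mod)))
    return out


# Hypothetical values: 3 input channels, 2 output channels.
weights = [[0.5, -1.0, 2.0], [1.5, 0.25, -0.75]]
style = [1.2, 0.8, 0.5]    # per-input-channel modulation scales
pixel = [0.3, -0.7, 1.1]   # activations at one spatial location

y_weights = modulated_conv_on_weights(weights, style, pixel)
y_activations = modulated_conv_on_activations(weights, style, pixel)
same = all(
    math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12)
    for a, b in zip(y_weights, y_activations)
)
```

Both functions return the same values up to floating-point rounding, because scaling the inputs or outputs of a linear operation is the same as scaling its weights.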

The StyleGAN2 building block uses ConvTranspose (left in the figure below) to upscale the input feature map. MobileStyleGAN instead uses the IDWT as the upscaling function in its building block (right in the figure below). Since the IDWT contains no trainable parameters, the study adds an extra depthwise separable modulated convolution after the IDWT layer.
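As a rough illustration of where the savings come from, the sketch below counts weights for a transposed-convolution upscaling step and for an IDWT followed by a depthwise separable convolution. The channel counts and kernel size are hypothetical, biases and style-modulation layers are ignored, and the numbers are not meant to reproduce the 71% figure reported for the full network.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard (or transposed) k x k convolution."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depthwise convolution plus a 1x1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


# Hypothetical block: 512 input channels, 512 output channels, 3x3 kernels.
k, c_in, c_out = 3, 512, 512

# Upscaling with a transposed convolution.
transpose_conv = conv_params(k, c_in, c_out)

# Upscaling with the IDWT: every four channels merge into one at twice the
# resolution (the inverse of the DWT splitting one channel into four),
# and the IDWT itself has no trainable parameters.
c_after_idwt = c_in // 4
idwt_plus_separable = 0 + depthwise_separable_params(k, c_after_idwt, c_out)

print(transpose_conv)        # 2359296
print(idwt_plus_separable)   # 66688
```

Under these assumed sizes the IDWT-based step needs a small fraction of the weights, since the upscaling itself is parameter-free and the depthwise separable convolution avoids the `k * k * c_in * c_out` product.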

The complete building block structure of StyleGAN2 and MobileStyleGAN is shown in the figure below:

Distillation-based training process

Similar to some previous studies, the training framework is based on knowledge distillation. The study uses StyleGAN2 as the teacher network and trains MobileStyleGAN to imitate its behavior. The training framework is shown in the figure below.
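The teacher-student idea can be sketched with a toy example: a fixed "teacher" function maps a latent vector to outputs, and a smaller "student" is trained by gradient descent to reproduce those outputs for the same latents. Everything here is an assumption made for illustration: the linear teacher and student, the squared-error imitation loss, and the hyperparameters are not the networks or the loss used in the paper.

```python
import random


def teacher(z):
    """Stand-in for the frozen teacher generator (hypothetical linear map)."""
    return [2.0 * v + 1.0 for v in z]


def student(z, w, b):
    """Stand-in for the student generator with two trainable parameters."""
    return [w * v + b for v in z]


def distill(steps=500, batch=16, lr=0.1, seed=0):
    """Train the student to match the teacher's outputs on random latents."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(steps):
        z = [rng.uniform(-1.0, 1.0) for _ in range(batch)]  # sample latents
        target = teacher(z)                                 # teacher output, no gradient
        pred = student(z, w, b)
        err = [p - t for p, t in zip(pred, target)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * v for e, v in zip(err, z)) / batch
        grad_b = 2.0 * sum(err) / batch
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = distill()  # w approaches 2.0 and b approaches 1.0
```

No real images are needed in this loop: the teacher's outputs for sampled latents serve as the training targets, which is the core of the distillation setup described above.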
