Recently, electric car company Tesla announced it will abandon radar and rely entirely on camera vision for autonomous driving systems.

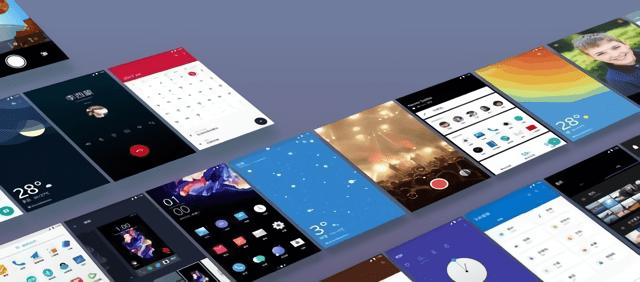

Tesla ditches radar sensors on self-driving systems. Photo: Getty Images

No company in the auto industry attracts as much attention as Tesla, partly because of CEO Elon Musk’s controversial statements and partly because Tesla regularly makes “unusual” decisions. At the end of May, Tesla once again became the focus of public opinion, this time not because of a “brake failure” but because of a configuration change.

Tesla announced that, starting in May, Model 3 and Model Y vehicles in North America will no longer be equipped with radar: the front-facing millimeter wave radar is removed, and cameras alone collect data for the automated driving system. This means Tesla is poised to be the first car company to deploy a “pure vision” autonomous driving solution. Meanwhile, other self-driving car manufacturers are integrating radar, LiDAR and cameras in their autonomous driving systems.

In fact, Elon Musk has always been adamantly against LiDAR and has repeatedly criticized it in public. He considers it an expensive, unnecessary technology. He has even called those who believe in LiDAR “stupid” and said that car manufacturers using it will never succeed.

So why does Musk so adamantly “dislike” LiDAR, and even radar in general, favoring only a camera-based solution? To clear this up, we need a basic understanding of how autonomous driving systems work.

Camera and LiDAR

An automated driving system is inseparable from its driving algorithm, and that algorithm needs sufficient information before it can compute anything. While driving, the vehicle collects that information through the various sensors on its body. Of these, the most important for autonomous driving are the camera and LiDAR (supplemented, of course, by millimeter wave radar and ultrasonic sensors).
Autonomous driving systems are therefore divided into a camera-based vision camp and a LiDAR camp. The camera-based camp relies on high-definition cameras combined with image recognition algorithms, while the LiDAR camp relies on LiDAR to ensure system stability.

The pure vision approach essentially simulates a person driving a car: the “eyes” (cameras) see the image, the “brain” (processor) processes and judges it, and the resulting action is handed to the “legs” (the steering mechanism). In addition to Tesla, Chinese companies including Zeekr and Baidu have also adopted pure vision solutions.

LiDAR uses a laser to measure distances and build a 3D map of objects: it emits laser pulses, receives their reflections, and analyzes that data to produce the result. Many Chinese car companies, including Xpeng, NIO and BAIC ARCFOX, have announced new models using this solution.

Which solution is better?

The advantage of the “pure vision” solution is that video captured by cameras is the closest to the real world as perceived by the human eye, and therefore the closest to how humans drive. And with LiDAR prices currently very high, a camera-only solution makes costs easier to control.

Tesla’s pure vision algorithms are already very strong.

In addition, camera images are well suited to object identification and classification; if the algorithms are good enough, they can filter out distracting items and keep learning automatically while the car is driving. However, like the human eye, a camera is easily affected by light, and harsh weather or poor lighting can easily cause misjudgments. Previously, with Autopilot turned on, Tesla vehicles braked unexpectedly when crossing an overpass or passing under an ordinary bridge, because the algorithm mistook a sudden shadow in the camera image for an obstacle.
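
The LiDAR ranging principle described above (emit a laser pulse, receive its reflection, derive a distance) can be illustrated with a minimal sketch. It assumes a simple time-of-flight model; the function names `tof_distance_m` and `polar_to_xyz` are hypothetical and are not taken from any vendor's software.

```python
import math

# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target from a laser pulse's round-trip time.

    Hypothetical helper: assumes an idealized time-of-flight measurement.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def polar_to_xyz(distance_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return (range plus beam angles) into a 3D point.

    Hypothetical helper: x points forward, y to the left, z upward.
    """
    horizontal = distance_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        distance_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    # A reflection received one microsecond after emission.
    distance = tof_distance_m(1e-6)
    print(f"range: {distance:.1f} m")
    print("point:", polar_to_xyz(distance, math.radians(10), math.radians(2)))
```

Repeating this conversion for every beam direction in a sweep yields the kind of 3D point map that a LiDAR-based system works from.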

Rendering of a 3D environment map drawn by LiDAR.

Compared with the pure vision solution, the LiDAR solution offers a longer detection range, higher accuracy, faster response, and immunity to ambient light. LiDAR is also more efficient at processing three-dimensional information and at calculating object size and speed. However, LiDAR has problems of its own: weak object recognition, high cost, and reduced accuracy in extreme weather such as rain and snow. LiDAR must therefore be used together with other sensors to ensure safe driving. In addition, the large volume of data it collects demands high computing power, which raises the cost of the processor.

In theory, combining camera-based vision with LiDAR is the most complete solution available today, but given factors such as existing technology and product cost, a “third solution” that integrates the two is still too difficult to deploy.

Tesla’s trade-off

The cost of LiDAR is much higher than that of high-quality cameras.

One of the key reasons Tesla abandoned LiDAR, and is now abandoning millimeter wave radar as well, is lower cost. LiDAR is very expensive: early units started at 100,000 USD, and although the price has fallen as the electronics industry has developed, it generally remains at several tens of thousands of dollars. That is still far more than a high-definition camera, which costs only a few hundred dollars.

Besides cost, Tesla also weighed technical trade-offs. First, LiDAR cannot determine what an object actually is, so it can easily lead to wrong judgments. For example, if a large plastic bag appears in front of the vehicle, a camera solution may recognize it as a plastic bag and ignore it, whereas LiDAR will judge it to be an obstacle and stop the vehicle.

Second, millimeter wave radar has many limitations too. Its detection range is directly limited by signal loss in its frequency band. It cannot identify pedestrians or accurately model all surrounding obstacles. And because of the large amount of data it produces, it also consumes precious processor computing power.

Finally, Tesla, a leader in vision-based solutions, has invested heavily in imaging algorithms. Giving up everything it has accumulated so far to take another path would, in terms of return on investment, simply not be worth it.

Tesla is loyal to a completely camera-based vision solution

Tesla Vision camera system.

On Tesla’s official North American website, the introduction to Full Self-Driving (FSD) no longer lists performance information for the millimeter wave radar; only the cameras and ultrasonic sensors remain. Tesla also released its driver monitoring system on May 28. The cameras in Model 3 and Model Y vehicles can monitor the driver’s behavior when Autopilot is activated, detecting inattention and reminding the driver to stay focused. Tesla states that “camera data will be stored in the vehicle, and the system will not send the data back to the company unless the user gives permission to share the data”.

In addition, Tesla’s FSD V9.0 will be officially launched this year. According to Musk’s earlier statement, the FSD algorithm has been “refactored” to achieve pure vision through the 8 cameras around the body, while effectively solving the earlier “brake failure” problem.

Tesla also warned that Autopilot and FSD would be more limited during this transition period. “During a short period of transition, Tesla Vision vehicles may experience some limited or inactive features, such as Autosteer being limited to a top speed of 120.7 km/h (75 mph); Smart Summon (if equipped) and Emergency Lane Departure Avoidance may be disabled at delivery,” Tesla’s announcement reads.

Whether LiDAR wins the market with its safety advantage or camera-based vision comes to dominate with its lower cost remains unclear. Judging from the information currently available, however, Tesla will stick with its pure vision solution.

